Given the head of a sorted linked list, delete all nodes that have duplicate numbers, leaving only distinct numbers from the original list. Return the linked list sorted as well.

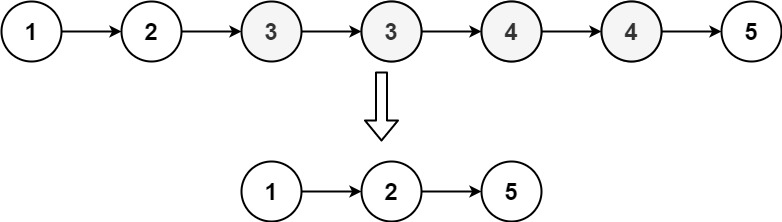

Example 1:

Input: head = [1,2,3,3,4,4,5]

Output: [1,2,5]

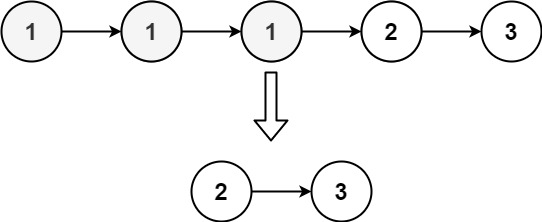

Example 2:

Input: head = [1,1,1,2,3]

Output: [2,3]

Constraints:

- The number of nodes in the list is in the range [0, 300].
- -100 <= Node.val <= 100

The core challenge of this problem is to remove every node whose value appears more than once in a sorted linked list. The significance of this problem lies in its applications in data cleaning and preprocessing, where duplicates need to be removed to ensure data integrity. A common pitfall is to remove only the extra copies of a repeated value while keeping one of them. Here, every node carrying a repeated value must go, so [1,1,1,2,3] becomes [2,3], not [1,2,3].
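To make the pitfall concrete, the sketch below contrasts the two readings of the problem. As a simplification of our own, it works on a sorted `std::vector<int>` instead of a linked list, and the names `keepOneCopy` and `keepDistinctOnly` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper showing the *wrong* reading: each run of equal values
// collapses to a single copy (the behavior of the simpler "keep one" variant).
std::vector<int> keepOneCopy(const std::vector<int>& sorted) {
    assert(std::is_sorted(sorted.begin(), sorted.end()));
    std::vector<int> out;
    for (int v : sorted) {
        // Push v only when it differs from the last value kept.
        if (out.empty() || out.back() != v) out.push_back(v);
    }
    return out;
}

// Hypothetical helper showing what this problem asks for: a value survives
// only if its run in the sorted input has length exactly 1.
std::vector<int> keepDistinctOnly(const std::vector<int>& sorted) {
    assert(std::is_sorted(sorted.begin(), sorted.end()));
    std::vector<int> out;
    std::size_t i = 0;
    while (i < sorted.size()) {
        std::size_t j = i;
        // Move j one past the end of the run of values equal to sorted[i].
        while (j < sorted.size() && sorted[j] == sorted[i]) ++j;
        if (j - i == 1) out.push_back(sorted[i]);
        i = j;
    }
    return out;
}
```

On the input of Example 2, `{1, 1, 1, 2, 3}`, `keepOneCopy` returns `{1, 2, 3}` while `keepDistinctOnly` returns `{2, 3}`, which is the expected output.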

To solve this problem, we need to traverse the linked list and identify nodes with duplicate values. A naive approach would use nested loops, but that is inefficient. Instead, we can make a single pass with a dummy node, which handles edge cases more gracefully.

The naive solution involves using nested loops to compare each node with every other node, which results in a time complexity of O(n^2). This is not optimal for larger lists.
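A minimal sketch of the naive idea, assuming the same `ListNode` definition as the solution below (redeclared so the snippet compiles on its own): for every node, rescan the whole list and keep the node only if its value occurs exactly once. The name `deleteDuplicatesNaive` is hypothetical, and collecting the survivors in a `std::vector` is our own simplification, so this version also uses O(n) extra space.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Same node type as in the optimized solution, redeclared so the sketch compiles on its own.
struct ListNode {
    int val;
    ListNode *next;
    ListNode() : val(0), next(nullptr) {}
    ListNode(int x) : val(x), next(nullptr) {}
    ListNode(int x, ListNode *next) : val(x), next(next) {}
};

// Hypothetical naive O(n^2) version: for each node, rescan the entire list to
// count nodes with the same value, and keep the node only if the count is 1.
ListNode* deleteDuplicatesNaive(ListNode* head) {
    std::vector<ListNode*> kept;  // survivors, in original order
    for (ListNode* cur = head; cur != nullptr; cur = cur->next) {
        int count = 0;
        for (ListNode* p = head; p != nullptr; p = p->next) {
            if (p->val == cur->val) ++count;
        }
        if (count == 1) kept.push_back(cur);
    }
    if (kept.empty()) return nullptr;
    // Relink the survivors only after both loops finish, so every scan above
    // sees the original, unmodified list.
    for (std::size_t i = 0; i + 1 < kept.size(); ++i) kept[i]->next = kept[i + 1];
    kept.back()->next = nullptr;
    return kept.front();
}
```

The two nested traversals are what make this O(n^2); the optimized approach avoids the inner rescan by relying on the list being sorted, so equal values are always adjacent.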

An optimized solution makes a single pass with a dummy node placed in front of the head. The dummy node handles the case where the head itself is a duplicate and must be removed. We keep a pointer, prev, to the last node known to be kept, and check for duplicates as we traverse the list.

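Here is a step-by-step breakdown of the optimized algorithm:

1. Create a dummy node whose next pointer is head, and set prev to the dummy node.
2. Walk the list with head. If head and head->next hold the same value, advance head to the last node of that run of equal values, then set prev->next to the node after the run, which unlinks every node in the run.
3. Otherwise the current value is distinct, so advance prev to keep the node.
4. Move head forward and repeat until the end of the list, then return the node after the dummy.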

```cpp
// Definition for singly-linked list.
struct ListNode {
    int val;
    ListNode *next;
    ListNode() : val(0), next(nullptr) {}
    ListNode(int x) : val(x), next(nullptr) {}
    ListNode(int x, ListNode *next) : val(x), next(next) {}
};

class Solution {
public:
    ListNode* deleteDuplicates(ListNode* head) {
        // Dummy node in front of head, so a duplicated head needs no special case.
        // It lives on the stack, so it is not leaked.
        ListNode dummy(0, head);
        ListNode* prev = &dummy; // Last node known to be kept

        while (head != nullptr) {
            // Check if the current node starts a run of duplicates
            if (head->next != nullptr && head->val == head->next->val) {
                // Skip to the last node of the run
                while (head->next != nullptr && head->val == head->next->val) {
                    head = head->next;
                }
                // Unlink the whole run: link prev to the node after the last duplicate
                prev->next = head->next;
            } else {
                // The current value is distinct, so keep the node
                prev = prev->next;
            }
            // Move to the next node
            head = head->next;
        }

        // Return the new head of the list
        return dummy.next;
    }
};
```
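To try the solution locally, a small driver like the following can be used. It is a sketch: `runExample` and `formatList` are hypothetical helpers, and storing the nodes in a `std::vector` is our own choice to make sure nodes unlinked by `deleteDuplicates` are still freed. The `ListNode` definition and the dummy-node solution are repeated so the file compiles on its own.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

struct ListNode {
    int val;
    ListNode *next;
    ListNode() : val(0), next(nullptr) {}
    ListNode(int x) : val(x), next(nullptr) {}
    ListNode(int x, ListNode *next) : val(x), next(next) {}
};

class Solution {
public:
    ListNode* deleteDuplicates(ListNode* head) {
        ListNode dummy(0, head);
        ListNode* prev = &dummy;
        while (head != nullptr) {
            if (head->next != nullptr && head->val == head->next->val) {
                // Skip the whole run of equal values and unlink it.
                while (head->next != nullptr && head->val == head->next->val) {
                    head = head->next;
                }
                prev->next = head->next;
            } else {
                prev = prev->next;  // distinct value: keep the node
            }
            head = head->next;
        }
        return dummy.next;
    }
};

// Hypothetical helper: render a list in the problem's "[a,b,c]" notation.
std::string formatList(const ListNode* head) {
    std::string s = "[";
    for (const ListNode* p = head; p != nullptr; p = p->next) {
        if (p != head) s += ",";
        s += std::to_string(p->val);
    }
    return s + "]";
}

// Hypothetical driver: the vector `nodes` owns every node, so nodes that
// deleteDuplicates unlinks are still destroyed when it goes out of scope.
std::string runExample(const std::vector<int>& values) {
    assert(std::is_sorted(values.begin(), values.end()));  // problem guarantees sorted input
    std::vector<ListNode> nodes;
    for (int v : values) nodes.emplace_back(v);
    // Link only after all nodes are stored, so the addresses are stable.
    for (std::size_t i = 0; i + 1 < nodes.size(); ++i) nodes[i].next = &nodes[i + 1];
    ListNode* head = nodes.empty() ? nullptr : &nodes[0];
    return formatList(Solution().deleteDuplicates(head));
}
```

With this driver, `runExample({1, 2, 3, 3, 4, 4, 5})` returns `"[1,2,5]"` and `runExample({1, 1, 1, 2, 3})` returns `"[2,3]"`, matching Examples 1 and 2.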

The time complexity of the optimized solution is O(n), where n is the number of nodes in the list. Although there is an inner loop, it advances the same pointer as the outer loop, so every node is visited exactly once in total. The space complexity is O(1), since only the dummy node and a couple of pointers are used.

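Potential edge cases include an empty list, a single-node list, a list in which every value is duplicated (for example [1,1]), a run of duplicates at the head (for example [1,1,2]) or at the tail (for example [1,2,2]), and a list with no duplicates at all. Because the dummy node sits in front of the head and prev only advances past nodes that are kept, each of these cases is handled without special-case code.

To test the solution comprehensively, cover the two examples from the problem statement plus one case for each of these edge cases. Using a testing framework like Google Test can help automate and validate these test cases.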
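Without pulling in Google Test, a plain `assert`-based check is enough to exercise these edge cases. This is a sketch: the helper `dedupe` and the function `runEdgeCaseTests` are hypothetical, the selection of cases is our own, and the checks only run when the file is compiled without `NDEBUG`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct ListNode {
    int val;
    ListNode *next;
    ListNode() : val(0), next(nullptr) {}
    ListNode(int x) : val(x), next(nullptr) {}
    ListNode(int x, ListNode *next) : val(x), next(next) {}
};

class Solution {
public:
    ListNode* deleteDuplicates(ListNode* head) {
        ListNode dummy(0, head);
        ListNode* prev = &dummy;
        while (head != nullptr) {
            if (head->next != nullptr && head->val == head->next->val) {
                // Skip the whole run of equal values and unlink it.
                while (head->next != nullptr && head->val == head->next->val) {
                    head = head->next;
                }
                prev->next = head->next;
            } else {
                prev = prev->next;  // distinct value: keep the node
            }
            head = head->next;
        }
        return dummy.next;
    }
};

// Hypothetical helper: build a list from sorted values (nodes owned by a local
// vector, so unlinked nodes are freed), run deleteDuplicates, return the values.
std::vector<int> dedupe(const std::vector<int>& values) {
    assert(std::is_sorted(values.begin(), values.end()));  // input must be sorted
    std::vector<ListNode> nodes;
    for (int v : values) nodes.emplace_back(v);
    for (std::size_t i = 0; i + 1 < nodes.size(); ++i) nodes[i].next = &nodes[i + 1];
    ListNode* head = nodes.empty() ? nullptr : &nodes[0];
    std::vector<int> out;
    for (ListNode* p = Solution().deleteDuplicates(head); p != nullptr; p = p->next) {
        out.push_back(p->val);
    }
    return out;
}

// Hypothetical edge-case checks; illustrative, not exhaustive.
void runEdgeCaseTests() {
    assert(dedupe(std::vector<int>{}).empty());                          // empty list
    assert((dedupe({7}) == std::vector<int>{7}));                       // single node
    assert(dedupe({1, 1}).empty());                                     // every value duplicated
    assert((dedupe({1, 1, 2}) == std::vector<int>{2}));                 // duplicates at the head
    assert((dedupe({1, 2, 2}) == std::vector<int>{1}));                 // duplicates at the tail
    assert((dedupe({1, 2, 3}) == std::vector<int>{1, 2, 3}));           // no duplicates
    assert((dedupe({-100, -100, 0, 100}) == std::vector<int>{0, 100}));  // values at the constraint bounds
}
```

The extra parentheses around each comparison are needed because `assert` is a macro, and the commas inside a braced list would otherwise be read as macro-argument separators.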

To get better at problems like this one, practice solving similar problems and study different algorithms to improve your problem-solving skills.

In this blog post, we discussed how to remove every node with a duplicated value from a sorted linked list using a single-pass, dummy-node approach. We covered the problem definition, approach, algorithm, code implementation, complexity analysis, edge cases, and testing. Understanding and solving such problems helps build strong problem-solving skills in programming.

