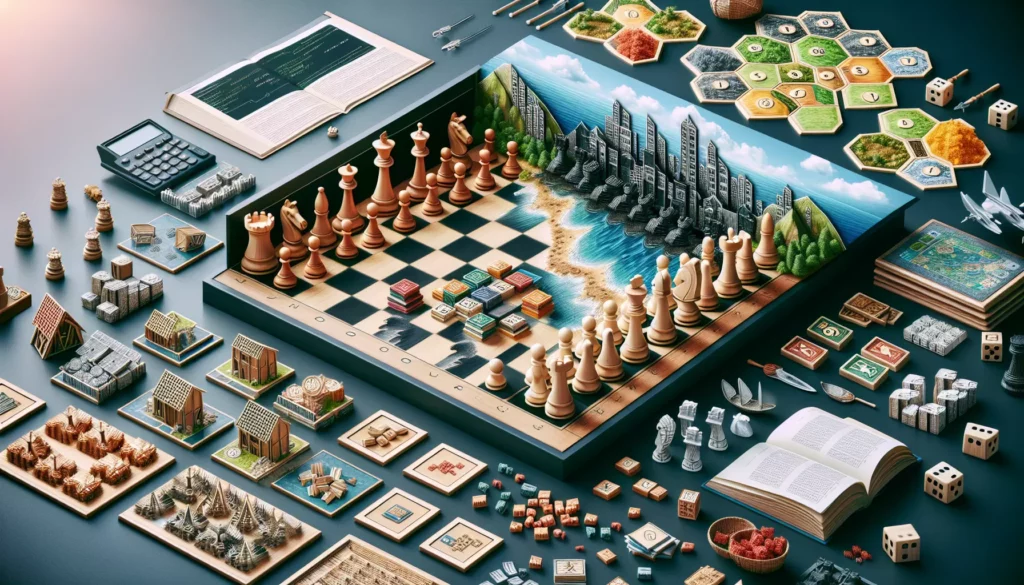

Learning Algorithms Through Board Games: From Chess to Settlers of Catan

In the world of computer science and programming, algorithms are the backbone of efficient problem-solving. These step-by-step procedures for solving complex problems are not just confined to the realm of coding; they’re all around us in our daily lives. One fascinating way to understand and appreciate algorithms is through the lens of board games. From the classic game of chess to the modern favorite Settlers of Catan, board games offer a tangible and engaging way to explore algorithmic thinking.

In this comprehensive guide, we’ll dive deep into how popular board games can help you grasp fundamental algorithmic concepts. We’ll explore how these games mirror real-world programming challenges and how the strategies employed in these games can be translated into coding practices. Whether you’re a beginner looking to understand basic algorithmic principles or an experienced programmer aiming to sharpen your problem-solving skills, this article will provide valuable insights and practical examples.

Table of Contents

- Chess: The Classic Algorithm Trainer

- Go: Mastering Complexity and Pattern Recognition

- Checkers: Understanding Tree Structures and Decision Making

- Monopoly: Probability and Resource Management Algorithms

- Settlers of Catan: Resource Optimization and Negotiation Algorithms

- Ticket to Ride: Graph Theory and Pathfinding Algorithms

- Pandemic: Collaborative Problem Solving and Optimization

- Scrabble: String Manipulation and Dictionary Algorithms

- Risk: Probability and Game Theory Algorithms

- Conclusion: From Board to Code

1. Chess: The Classic Algorithm Trainer

Chess, often called the “game of kings,” is perhaps the most well-known board game for developing strategic thinking. It’s also an excellent tool for understanding and practicing algorithmic concepts.

Minimax Algorithm

The minimax algorithm is a decision-making algorithm used in two-player games like chess. It aims to minimize the possible loss for a worst-case scenario. In chess, this translates to choosing moves that lead to the best possible outcome, assuming your opponent also plays optimally.

Here’s a simplified implementation of the minimax algorithm in Python:

def minimax(position, depth, maximizing_player):

if depth == 0 or game_over(position):

return evaluate(position)

if maximizing_player:

max_eval = float('-inf')

for move in get_possible_moves(position):

eval = minimax(make_move(position, move), depth - 1, False)

max_eval = max(max_eval, eval)

return max_eval

else:

min_eval = float('inf')

for move in get_possible_moves(position):

eval = minimax(make_move(position, move), depth - 1, True)

min_eval = min(min_eval, eval)

return min_evalAlpha-Beta Pruning

Alpha-beta pruning is an optimization technique for the minimax algorithm. It reduces the number of nodes evaluated in the search tree. This concept is crucial in chess engines and other game-playing algorithms.

Here’s how you might implement alpha-beta pruning:

def alpha_beta(position, depth, alpha, beta, maximizing_player):

if depth == 0 or game_over(position):

return evaluate(position)

if maximizing_player:

max_eval = float('-inf')

for move in get_possible_moves(position):

eval = alpha_beta(make_move(position, move), depth - 1, alpha, beta, False)

max_eval = max(max_eval, eval)

alpha = max(alpha, eval)

if beta <= alpha:

break

return max_eval

else:

min_eval = float('inf')

for move in get_possible_moves(position):

eval = alpha_beta(make_move(position, move), depth - 1, alpha, beta, True)

min_eval = min(min_eval, eval)

beta = min(beta, eval)

if beta <= alpha:

break

return min_evalHeuristic Evaluation

In chess, heuristic evaluation is used to assess the strength of a position when it’s not feasible to calculate all possible outcomes. This concept is widely applicable in various algorithmic problems where approximations are necessary due to computational limitations.

A simple heuristic function for chess might look like this:

def evaluate_position(board):

score = 0

piece_values = {

'P': 1, 'N': 3, 'B': 3, 'R': 5, 'Q': 9, 'K': 0,

'p': -1, 'n': -3, 'b': -3, 'r': -5, 'q': -9, 'k': 0

}

for row in board:

for piece in row:

if piece in piece_values:

score += piece_values[piece]

return score2. Go: Mastering Complexity and Pattern Recognition

Go, an ancient board game originating from China, is renowned for its complexity and depth. It presents unique challenges that have pushed the boundaries of artificial intelligence and algorithm design.

Monte Carlo Tree Search (MCTS)

The Monte Carlo Tree Search algorithm gained prominence with its successful application in Go-playing programs. MCTS is particularly useful in games with high branching factors where traditional minimax approaches become impractical.

Here’s a simplified implementation of MCTS:

import random

import math

class Node:

def __init__(self, state, parent=None):

self.state = state

self.parent = parent

self.children = []

self.visits = 0

self.value = 0

def mcts(root, iterations):

for _ in range(iterations):

node = select(root)

child = expand(node)

result = simulate(child.state)

backpropagate(child, result)

return best_child(root)

def select(node):

while node.children:

if not all(child.visits for child in node.children):

return expand(node)

node = best_uct(node)

return node

def expand(node):

if node.state.is_terminal():

return node

unvisited = [move for move in node.state.get_legal_moves() if move not in [child.state.last_move for child in node.children]]

if not unvisited:

return node

new_state = node.state.make_move(random.choice(unvisited))

child = Node(new_state, parent=node)

node.children.append(child)

return child

def simulate(state):

while not state.is_terminal():

state = state.make_move(random.choice(state.get_legal_moves()))

return state.get_result()

def backpropagate(node, result):

while node:

node.visits += 1

node.value += result

node = node.parent

def best_uct(node):

return max(node.children, key=lambda c: c.value / c.visits + math.sqrt(2 * math.log(node.visits) / c.visits))

def best_child(node):

return max(node.children, key=lambda c: c.visits)Pattern Recognition

Go relies heavily on pattern recognition, a concept that’s crucial in many areas of computer science, including machine learning and computer vision. In Go, recognizing common shapes and formations is key to strategic play.

Here’s a simple example of how you might implement basic pattern recognition in Go:

def recognize_pattern(board, pattern):

for i in range(len(board) - len(pattern) + 1):

for j in range(len(board[0]) - len(pattern[0]) + 1):

if match_pattern(board, pattern, i, j):

return True

return False

def match_pattern(board, pattern, start_row, start_col):

for i in range(len(pattern)):

for j in range(len(pattern[0])):

if pattern[i][j] != '*' and board[start_row + i][start_col + j] != pattern[i][j]:

return False

return True

# Example usage

board = [

['B', 'W', 'B', 'W'],

['W', 'B', 'W', 'B'],

['B', 'W', 'B', 'W'],

['W', 'B', 'W', 'B']

]

pattern = [

['B', 'W'],

['W', 'B']

]

print(recognize_pattern(board, pattern)) # Output: True3. Checkers: Understanding Tree Structures and Decision Making

Checkers, also known as Draughts in some parts of the world, is a classic board game that offers valuable insights into tree structures and decision-making algorithms.

Game Tree

The game tree in checkers represents all possible moves and their outcomes. Understanding and navigating this tree structure is crucial for developing strong checkers algorithms and has applications in various other algorithmic problems.

Here’s a simple representation of a game tree node in Python:

class CheckersNode:

def __init__(self, board, player, move=None):

self.board = board

self.player = player

self.move = move

self.children = []

self.value = None

def add_child(self, child):

self.children.append(child)

def is_terminal(self):

# Check if the game is over

return len(self.get_legal_moves()) == 0

def get_legal_moves(self):

# Return a list of legal moves

# Implementation depends on the specific rules of checkers

pass

def make_move(self, move):

# Apply the move and return a new board state

passIterative Deepening

Iterative deepening is a strategy used in game tree search algorithms. It combines the benefits of depth-first search’s space efficiency and breadth-first search’s completeness.

Here’s an example of how iterative deepening might be implemented for checkers:

def iterative_deepening(board, max_depth):

best_move = None

for depth in range(1, max_depth + 1):

move, value = minimax(board, depth, True)

if value == float('inf'): # Found a winning move

return move

best_move = move

return best_move

def minimax(node, depth, maximizing_player):

if depth == 0 or node.is_terminal():

return None, evaluate(node)

best_move = None

if maximizing_player:

best_value = float('-inf')

for move in node.get_legal_moves():

child = CheckersNode(node.make_move(move), not maximizing_player, move)

_, value = minimax(child, depth - 1, False)

if value > best_value:

best_value = value

best_move = move

else:

best_value = float('inf')

for move in node.get_legal_moves():

child = CheckersNode(node.make_move(move), not maximizing_player, move)

_, value = minimax(child, depth - 1, True)

if value < best_value:

best_value = value

best_move = move

return best_move, best_value

def evaluate(node):

# Implement board evaluation logic

pass4. Monopoly: Probability and Resource Management Algorithms

Monopoly, the classic property trading game, offers excellent opportunities to explore probability and resource management algorithms.

Probability Calculation

Understanding the probabilities of landing on different properties is crucial in Monopoly. This concept has wide applications in various algorithms dealing with uncertainty and risk assessment.

Here’s a simple example of calculating the probability of landing on a specific property after rolling two dice:

import random

def roll_dice():

return random.randint(1, 6) + random.randint(1, 6)

def simulate_monopoly(num_simulations, target_property):

landings = 0

for _ in range(num_simulations):

position = 0

for _ in range(10): # Simulate 10 turns

position = (position + roll_dice()) % 40

if position == target_property:

landings += 1

break

return landings / num_simulations

# Example: Probability of landing on Boardwalk (position 39) in 10 turns

print(simulate_monopoly(100000, 39))Resource Management

Monopoly is fundamentally a game of resource management. Players must decide how to allocate their limited money across properties, houses, and hotels. This mirrors many real-world algorithmic problems in finance, logistics, and operations research.

Here’s a simple algorithm for deciding whether to buy a property in Monopoly:

def should_buy_property(player_cash, property_cost, owned_properties):

# Don't buy if it would leave you with less than 500 cash

if player_cash - property_cost < 500:

return False

# Buy if you have less than 3 properties

if len(owned_properties) < 3:

return True

# Buy if it completes a set

if completes_set(property_cost, owned_properties):

return True

# Otherwise, buy with 50% probability

return random.random() < 0.5

def completes_set(property, owned_properties):

# Implementation depends on the specific rules of Monopoly

pass5. Settlers of Catan: Resource Optimization and Negotiation Algorithms

Settlers of Catan, a modern classic board game, presents complex challenges in resource optimization and negotiation, making it an excellent platform for exploring advanced algorithmic concepts.

Resource Optimization

In Catan, players must optimize their resource collection and usage. This mirrors many real-world optimization problems in fields like supply chain management and operations research.

Here’s a simple resource optimization algorithm for Catan:

def optimize_build_order(resources, costs, values):

n = len(costs)

dp = [[0 for _ in range(resources + 1)] for _ in range(n + 1)]

for i in range(1, n + 1):

for w in range(resources + 1):

if costs[i-1] <= w:

dp[i][w] = max(values[i-1] + dp[i-1][w-costs[i-1]], dp[i-1][w])

else:

dp[i][w] = dp[i-1][w]

return dp[n][resources]

# Example usage

resources = 10

costs = [3, 4, 5, 2] # Cost of road, settlement, city, development card

values = [1, 2, 3, 1] # Victory points for each

print(optimize_build_order(resources, costs, values))Negotiation Algorithms

Catan involves player-to-player trading, which can be modeled using negotiation algorithms. These algorithms have applications in fields like economics, artificial intelligence, and multi-agent systems.

Here’s a simple negotiation algorithm for Catan trading:

def propose_trade(my_resources, their_resources, my_needs, their_needs):

offer = {}

request = {}

for resource in my_needs:

if their_resources[resource] > 0:

request[resource] = min(their_resources[resource], my_needs[resource])

for resource in their_needs:

if my_resources[resource] > my_needs.get(resource, 0):

offer[resource] = min(my_resources[resource] - my_needs.get(resource, 0), their_needs[resource])

if len(offer) > 0 and len(request) > 0:

return offer, request

else:

return None, None

# Example usage

my_resources = {'wood': 3, 'brick': 2, 'ore': 1, 'grain': 0, 'wool': 4}

their_resources = {'wood': 1, 'brick': 3, 'ore': 2, 'grain': 2, 'wool': 0}

my_needs = {'grain': 2}

their_needs = {'wool': 2}

offer, request = propose_trade(my_resources, their_resources, my_needs, their_needs)

print(f"Offer: {offer}")

print(f"Request: {request}")6. Ticket to Ride: Graph Theory and Pathfinding Algorithms

Ticket to Ride, a popular board game about building train routes, is an excellent platform for exploring graph theory and pathfinding algorithms.

Graph Representation

The game board in Ticket to Ride can be represented as a graph, where cities are nodes and train routes are edges. This representation is fundamental to many computer science problems.

Here’s a simple way to represent the Ticket to Ride graph:

class City:

def __init__(self, name):

self.name = name

self.connections = {}

def add_connection(self, other_city, distance):

self.connections[other_city] = distance

class TicketToRideMap:

def __init__(self):

self.cities = {}

def add_city(self, name):

if name not in self.cities:

self.cities[name] = City(name)

def add_route(self, city1, city2, distance):

self.add_city(city1)

self.add_city(city2)

self.cities[city1].add_connection(city2, distance)

self.cities[city2].add_connection(city1, distance)

# Example usage

game_map = TicketToRideMap()

game_map.add_route("New York", "Washington", 2)

game_map.add_route("New York", "Pittsburgh", 2)

game_map.add_route("Washington", "Pittsburgh", 2)Dijkstra’s Algorithm

Dijkstra’s algorithm is a popular pathfinding algorithm that can be used to find the shortest route between two cities in Ticket to Ride. This algorithm has wide applications in network routing, GPS navigation, and more.

Here’s an implementation of Dijkstra’s algorithm for Ticket to Ride:

import heapq

def dijkstra(graph, start, end):

distances = {city: float('infinity') for city in graph.cities}

distances[start] = 0

pq = [(0, start)]

previous = {city: None for city in graph.cities}

while pq:

current_distance, current_city = heapq.heappop(pq)

if current_city == end:

path = []

while current_city:

path.append(current_city)

current_city = previous[current_city]

return path[::-1], current_distance

if current_distance > distances[current_city]:

continue

for neighbor, weight in graph.cities[current_city].connections.items():

distance = current_distance + weight

if distance < distances[neighbor]:

distances[neighbor] = distance

previous[neighbor] = current_city

heapq.heappush(pq, (distance, neighbor))

return None, float('infinity')

# Example usage

path, distance = dijkstra(game_map, "New York", "Pittsburgh")

print(f"Shortest path: {' -> '.join(path)}")

print(f"Total distance: {distance}")7. Pandemic: Collaborative Problem Solving and Optimization

Pandemic, a cooperative board game where players work together to save the world from disease outbreaks, presents unique challenges in collaborative problem-solving and optimization.

Multi-Agent Planning

In Pandemic, players must coordinate their actions to achieve a common goal. This mirrors multi-agent planning problems in artificial intelligence and robotics.

Here’s a simplified multi-agent planning algorithm for Pandemic:

class PandemicState:

def __init__(self, players, diseases, cures):

self.players = players

self.diseases = diseases

self.cures = cures

def collaborative_plan(state, depth):

if depth == 0 or is_game_over(state):

return evaluate(state), []

best_score = float('-inf')

best_plan = []

for player in state.players:

for action in get_possible_actions(player, state):

new_state = apply_action(state, player, action)

score, sub_plan = collaborative_plan(new_state, depth - 1)

if score > best_score:

best_score = score

best_plan = [action] + sub_plan

return best_score, best_plan

def is_game_over(state):

# Check if the game is won or lost

pass

def evaluate(state):

# Evaluate the current game state

pass

def get_possible_actions(player, state):

# Return list of possible actions for the player

pass

def apply_action(state, player, action):

# Apply the action and return new state

pass

# Example usage

initial_state = PandemicState(players=['Medic', 'Scientist'], diseases={'Blue': 3, 'Yellow': 2}, cures=[])

score, plan = collaborative_plan(initial_state, depth=3)

print(f"Plan score: {score}")

print(f"Best plan: {plan}")Resource Allocation

Efficient resource allocation is crucial in Pandemic. Players must decide how to use limited actions and cards to best combat the diseases. This problem is analogous to many resource allocation problems in computer science and operations research.

Here’s a simple resource allocation algorithm for Pandemic:

def allocate_resources(players, cities, diseases):

allocations = {player: [] for player in players}

# Sort cities by disease level

sorted_cities = sorted(cities, key=lambda c: sum(diseases[c].values()), reverse=True)

for city in sorted_cities:

# Find the nearest player

nearest_player = min(players, key=lambda p: distance(p.location, city))

# Allocate the player to the city

allocations[nearest_player].append(city)

# If the player has been allocated 2 cities, remove them from consideration

if len(allocations[nearest_player]) == 2:

players.remove(nearest_player)

if not players:

break

return allocations

def distance(location1, location2):

# Calculate distance between two locations

pass

# Example usage

players = [Player('Medic', 'New York'), Player('Scientist', 'Atlanta')]

cities = ['New York', 'Atlanta', 'Chicago', 'San Francisco']

diseases = {

'New York': {'Blue': 2},

'Atlanta': {'Blue': 1, 'Yellow': 1},

'Chicago': {'Blue': 3},

'San Francisco': {'Blue': 1, 'Red': 2}

}

allocations = allocate_resources(players, cities, diseases)

for player, cities in allocations.items():

print(f"{player.role} allocated to: {', '.join(cities)}")8. Scrabble: String Manipulation and Dictionary Algorithms

Scrabble, the classic word game, is an excellent platform for exploring string manipulation and dictionary algorithms, which are fundamental in many areas of computer science.

Trie Data Structure

A Trie (prefix tree) is an efficient data structure for storing and searching strings. It’s particularly useful for implementing a Scrabble dictionary.

Here’s a simple implementation of a Trie for Scrabble:

class TrieNode:

def __init__(self):

self.children = {}

self.is_end = False

class Trie:

def __init__(self):

self.root = TrieNode()

def insert(self, word):

node = self.root

for char in word:

if char not in node.children:

node.children[char] = TrieNode()

node = node.children[char]

node.is_end = True

def search(self, word):

node = self.root

for char in word:

if char not in node.children:

return False

node = node.children[char]

return node.is_end

def starts_with(self, prefix):

node = self.root

for char in prefix:

if char not in node.children:

return False

node = node.children[char]

return True

# Example usage

scrabble_dict = Trie()

words = ["CAT", "DOG", "TIGER", "LION"]

for word in words:

scrabble_dict.insert(word)

print(scrabble_dict.search("CAT")) # True

print(scrabble_dict.search("ELEPHANT")) # False

print(scrabble_dict.starts_with("TI")) # TrueAnagram Generation

Generating anagrams is a key skill in Scrabble. This problem involves permutations and combinations, which are important concepts in algorithmic thinking.

Here’s an algorithm to generate all possible words from a given set of letters:

from itertools import permutations

def generate_words(letters, scrabble_dict):

words = set()

for r in range(2, len(letters) + 1):

for perm in permutations(letters, r):

word = ''.join(perm)

if scrabble_dict.search(word):

words.add(word)

return list(words)

# Example usage

letters = "RSTLNE"

possible_words = generate_words(letters, scrabble_dict)

print(f"Possible words: {', '.join(possible_words)}")9. Risk: Probability and Game Theory Algorithms

Risk, the game of global domination, provides an excellent platform for exploring probability and game theory algorithms. These concepts are crucial in many areas of computer science, particularly in artificial intelligence and decision-making systems.

Battle Outcome Probability

In Risk, battles are resolved using dice rolls. Understanding the probability of different outcomes is crucial for strategic play. This concept has applications in many fields, including statistics and machine learning.

Here’s a function to calculate the probability of winning a battle in Risk:

import math

def battle_probability(attackers, defenders):

total_outcomes = 6**attackers * 6**defenders

favorable_outcomes = 0

for attack_roll in range(1, 7):

for defend_roll in range(1, 7):

if attack_roll > defend_roll:

favorable_outcomes += 1

probability = favorable_outcomes / total_outcomes

return probability

# Example usage

attackers = 3

defenders = 2

win_prob = battle_probability(attackers, defenders)

print(f"Probability of attacker winning: {win_prob:.2f}")Territory Evaluation

Evaluating the strategic value of territories is a key aspect of Risk. This involves considering factors like connectivity, continent bonuses, and threat levels. Such evaluation algorithms have applications in various fields, including logistics and network analysis.

Here’s a simple territory evaluation function for Risk:

def evaluate_territory(territory, game_state):

score = 0

# Base value

score += 1

# Connectivity value

score += len(game_state.get_adjacent_territories(territory))

# Continent bonus

if territory in game_state.get_continent_territories(game_state.get_continent(territory)):

score += game_state.get_continent_bonus(game_state.get_continent(territory))

# Threat level (negative score)

for adjacent in game_state.get_adjacent_territories(territory):

if game_state.get_owner(adjacent) != game_state.get_owner(territory):

score -= game_state.get_armies(adjacent)

return score

class GameState:

def get_adjacent_territories(self, territory):

# Return list of adjacent territories

pass

def get_continent(self, territory):

# Return the continent the territory belongs to

pass

def get_continent_territories(self, continent):

# Return list of territories in the continent

pass

def get_continent_bonus(self, continent):

# Return the bonus for controlling the continent

pass

def get_owner(self, territory):

# Return the player who owns the territory

pass

def get_armies(self, territory):

# Return the number of armies in the territory

pass

# Example usage

game_state = GameState()

territory = "Eastern United States"

value = evaluate_territory(territory, game_state)

print(f"Strategic value of {territory}: {value}")10. Conclusion: From Board to Code

As we’ve explored in this comprehensive guide, board games offer a rich and engaging way to understand and practice fundamental algorithmic concepts. From the classic strategies of chess to the modern complexities of Settlers of Catan, each game presents unique challenges that mirror real-world programming problems.

Let’s recap some of the key algorithmic concepts we’ve covered:

- Chess: Minimax algorithm, alpha-beta pruning, and heuristic evaluation

- Go: Monte Carlo Tree Search and pattern recognition

- Checkers: Game tree structures and iterative deepening

- Monopoly: Probability calculation and resource management

- Settlers of Catan: Resource optimization and negotiation algorithms

- Ticket to Ride: Graph theory and Dijkstra’s algorithm

- Pandemic: Multi-agent planning and resource allocation

- Scrabble: T