Convolutional Neural Networks: Revolutionizing Image Processing and Beyond

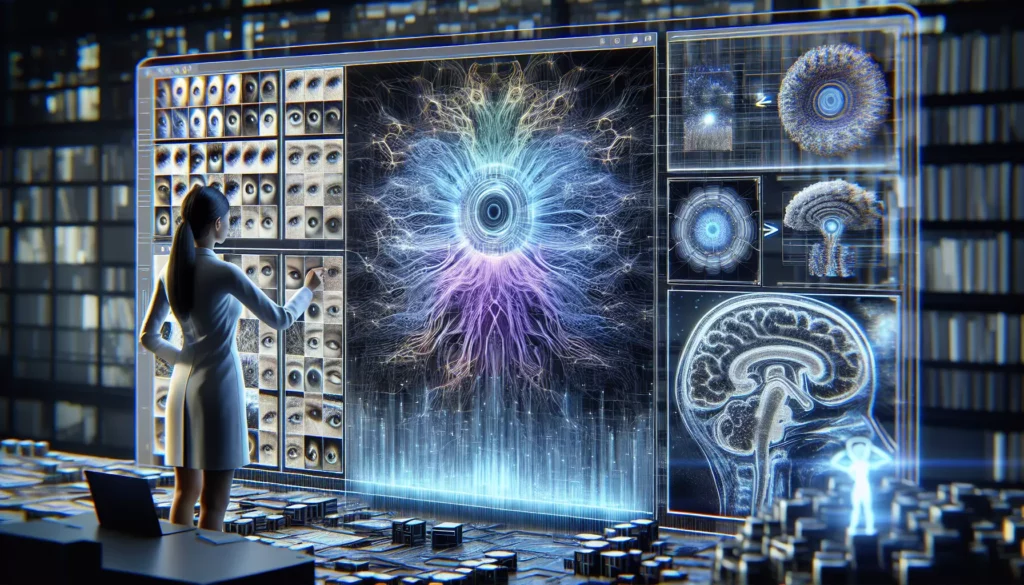

In the rapidly evolving field of artificial intelligence and machine learning, Convolutional Neural Networks (CNNs) have emerged as a groundbreaking technology, particularly in the domain of image processing and computer vision. These powerful deep learning models have revolutionized how machines interpret and analyze visual data, leading to significant advancements in various applications, from facial recognition to autonomous vehicles. In this comprehensive guide, we’ll delve into the intricacies of CNNs, exploring their structure, functionality, and the wide-ranging impact they’ve had on modern technology.

What is a Convolutional Neural Network?

A Convolutional Neural Network, often abbreviated as CNN or ConvNet, is a specialized type of deep learning algorithm designed to process data with a grid-like topology. While CNNs are most commonly associated with image analysis, they can also be applied to other types of data such as audio signals or time series data.

The term “convolutional” in CNNs refers to the mathematical operation of convolution, which is at the heart of how these networks process information. By applying learned filters across the input, this operation allows the network to capture spatial dependencies in an image (or temporal dependencies in sequential data such as audio).

Key Components of a CNN

To understand how CNNs work, it’s essential to familiarize ourselves with their main components:

- Convolutional Layers: These layers apply a set of learnable filters to the input data, creating feature maps that highlight important aspects of the image.

- Pooling Layers: These layers reduce the spatial dimensions of the feature maps, helping to manage computational complexity and improve robustness.

- Activation Functions: Non-linear functions like ReLU (Rectified Linear Unit) are applied to introduce non-linearity into the model.

- Fully Connected Layers: These layers connect every neuron in one layer to every neuron in another layer, typically used in the final stages of the network for classification tasks.

The Architecture of Convolutional Neural Networks

Let’s dive deeper into the architecture of CNNs and explore how each component contributes to the network’s ability to process and understand images.

1. Convolutional Layers

The convolutional layer is the core building block of a CNN. It’s responsible for detecting features in the input image, such as edges, textures, and shapes. The process of convolution involves sliding a small window (filter or kernel) across the input image and performing element-wise multiplication followed by summation.

Here’s a simplified example of how convolution works:

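Input image (5×5):

1 1 1 0 0

0 1 1 1 0

0 0 1 1 1

0 0 1 1 0

0 1 1 0 0

Filter (3×3):

1 0 1

0 1 0

1 0 1

Result of convolution (stride 1, no padding):

4 3 4

2 4 3

2 3 4

For example, the top-left output value comes from the top-left 3×3 window of the image: 1·1 + 1·0 + 1·1 + 0·0 + 1·1 + 1·0 + 0·1 + 0·0 + 1·1 = 4.

In practice, CNNs use multiple filters in each convolutional layer, each capable of detecting different features. The output of each filter is called a feature map.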
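The worked example above can be reproduced in a few lines of plain Python. This is a minimal sketch rather than how a deep learning library implements the operation: it uses nested lists, a stride of 1, and no padding, and the function name `conv2d_valid` is chosen here for illustration. It assumes the 3×3 filter with rows 1 0 1, 0 1 0, 1 0 1, which is the filter that yields the result shown. Like most deep learning libraries, it does not flip the kernel (strictly speaking, this is cross-correlation); for this symmetric filter the two give the same result.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with stride 1 and no padding."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for i in range(out_rows):
        row = []
        for j in range(out_cols):
            # Element-wise multiply the current window by the kernel, then sum.
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(k_rows)
                for dj in range(k_cols)
            )
            row.append(total)
        output.append(row)
    return output


image = [
    [1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 0, 0],
]
kernel = [
    [1, 0, 1],
    [0, 1, 0],
    [1, 0, 1],
]

feature_map = conv2d_valid(image, kernel)
for row in feature_map:
    print(row)
# [4, 3, 4]
# [2, 4, 3]
# [2, 3, 4]
```

A 5×5 input and a 3×3 filter give a 3×3 feature map because the window fits in 5 − 3 + 1 = 3 positions along each axis.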

2. Pooling Layers

Pooling layers, also known as downsampling layers, are typically inserted between successive convolutional layers. Their primary function is to progressively reduce the spatial size of the representation, which helps to:

- Reduce the number of parameters and computational load in the network

- Control overfitting

- Make the network more robust (approximately invariant) to small translations in the input

The most common type of pooling operation is max pooling, which outputs the maximum value within a rectangular neighborhood. Other types include average pooling and L2-norm pooling.
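As a rough illustration of max pooling, the sketch below applies a 2×2 window with a stride of 2 to a small feature map. The function name `max_pool2d`, the window size, and the input values are assumptions made for this example.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Return the maximum of each `size` x `size` window, moving by `stride`."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, stride):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, stride):
            window = [
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


# Hypothetical 4x4 feature map.
feature_map = [
    [1, 3, 2, 1],
    [4, 6, 5, 0],
    [7, 2, 9, 8],
    [1, 0, 3, 4],
]

pooled = max_pool2d(feature_map)
print(pooled)  # [[6, 5], [7, 9]]
```

The 4×4 input shrinks to 2×2, so later layers handle a quarter as many values. Moving the 6 to any other position inside the top-left window leaves the output unchanged, which is the source of the tolerance to small shifts.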

3. Activation Functions

Activation functions introduce non-linearity into the network, allowing it to learn complex patterns. The most commonly used activation function in CNNs is the Rectified Linear Unit (ReLU), defined as:

f(x) = max(0, x)

ReLU has several advantages:

- It’s computationally efficient

- It helps mitigate the vanishing gradient problem

- It introduces sparsity in the network, which can be beneficial for feature learning
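A minimal sketch of ReLU and its derivative in plain Python makes these properties concrete. The helper names `relu` and `relu_grad` and the sample inputs are chosen here for illustration; taking the derivative at exactly 0 to be 0 is a common convention, not the only possible one.

```python
def relu(x):
    """Rectified Linear Unit: pass positive values through, zero out the rest."""
    return max(0.0, x)


def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise (0 at x == 0 by convention)."""
    return 1.0 if x > 0 else 0.0


inputs = [-2.0, -0.5, 0.0, 1.5, 3.0]
activations = [relu(v) for v in inputs]
gradients = [relu_grad(v) for v in inputs]

print(activations)  # [0.0, 0.0, 0.0, 1.5, 3.0]
print(gradients)    # [0.0, 0.0, 0.0, 1.0, 1.0]
```

Three of the five outputs are exactly zero, which is the sparsity mentioned above. For positive inputs the gradient is exactly 1, so it is not shrunk as it passes backward through the activation.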

4. Fully Connected Layers

The fully connected layers are typically found at the end of the network. They take the high-level features learned by the convolutional and pooling layers and use them to classify the input image into various categories.

In a fully connected layer, every neuron is connected to every neuron in the previous layer. This allows the network to combine all the features learned by previous layers to make the final classification decision.
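The sketch below shows one way a fully connected layer can turn a flattened feature vector into class probabilities. The feature values, weights, and biases are made-up numbers, and the softmax step is an assumption about the output stage (it is a common choice for classification, but the text above does not specify one).

```python
import math


def dense(inputs, weights, biases):
    """Fully connected layer: every output neuron sees every input value."""
    return [
        sum(w * x for w, x in zip(weight_row, inputs)) + b
        for weight_row, b in zip(weights, biases)
    ]


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - largest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical flattened features from the last pooling layer.
features = [0.5, 1.0, -1.0, 2.0]

# Hypothetical parameters: 3 output classes, 4 inputs each.
weights = [
    [0.2, -0.1, 0.0, 0.3],
    [-0.3, 0.4, 0.1, 0.0],
    [0.1, 0.1, 0.1, 0.1],
]
biases = [0.0, 0.1, -0.1]

logits = dense(features, weights, biases)
probs = softmax(logits)
predicted_class = probs.index(max(probs))
print(predicted_class)  # 0
```

Each row of `weights` holds one output neuron's connections to all four inputs, which is what "fully connected" means in practice.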

Training a Convolutional Neural Network

Training a CNN involves adjusting the network’s parameters (weights and biases) to minimize the difference between the network’s predictions and the true labels of the training data. This process typically uses backpropagation to compute the gradients of the loss with respect to the parameters, together with an optimization algorithm such as gradient descent to update them.

The Training Process

- Forward Pass: The input image is passed through the network, generating a prediction.

- Loss Calculation: The difference between the prediction and the true label is calculated using a loss function.

- Backward Pass: The gradient of the loss with respect to each parameter is computed using backpropagation.

- Parameter Update: The parameters are updated in the direction that minimizes the loss, typically using an optimization algorithm like Stochastic Gradient Descent (SGD).

This process is repeated for many iterations over the entire training dataset, gradually improving the network’s performance.
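The four steps above can be sketched on a deliberately tiny stand-in model. The example below fits a single linear neuron to noiseless data generated from y = 2x + 1 using SGD; the data, learning rate, and epoch count are assumptions chosen for illustration. A real CNN has far more parameters and relies on a framework's automatic differentiation rather than hand-derived gradients, but the loop has the same shape.

```python
import random

random.seed(0)

# Toy dataset: targets follow y = 2x + 1 exactly.
data = [(x, 2.0 * x + 1.0) for x in [-2.0, -1.0, 0.0, 1.0, 2.0]]

w, b = 0.0, 0.0        # parameters to learn
learning_rate = 0.05

for epoch in range(200):
    random.shuffle(data)  # "stochastic": visit samples in random order
    for x, y_true in data:
        # 1. Forward pass: compute the prediction.
        y_pred = w * x + b
        # 2. Loss calculation: squared error for this sample.
        loss = (y_pred - y_true) ** 2
        # 3. Backward pass: gradients of the loss via the chain rule.
        grad_w = 2.0 * (y_pred - y_true) * x
        grad_b = 2.0 * (y_pred - y_true)
        # 4. Parameter update: step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b

print(round(w, 3), round(b, 3))  # 2.0 1.0
```

Each pass over the shuffled dataset is one epoch; the parameters approach the values used to generate the data because the loss gradient points away from them at every step.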

Challenges in Training CNNs

While CNNs have proven to be incredibly powerful, training them effectively can be challenging. Some common issues include:

- Overfitting: When the model learns the training data too well, including its noise and peculiarities, it may not generalize well to new, unseen data.

- Vanishing/Exploding Gradients: In deep networks, gradients can become very small (vanishing) or very large (exploding) during backpropagation, making training difficult.

- Computational Complexity: Training large CNNs can be computationally intensive, requiring significant hardware resources and time.

To address these challenges, researchers have developed various techniques such as data augmentation, transfer learning, batch normalization, and the use of specialized optimizers.
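Of the techniques listed, data augmentation is the simplest to illustrate. The sketch below randomly mirrors an image left to right, so the network sees more varied inputs without any new labeled data. The function names and the 50% flip probability are assumptions for this example, and real pipelines usually combine several transformations.

```python
import random


def horizontal_flip(image):
    """Mirror an image (a list of pixel rows) left to right."""
    return [list(reversed(row)) for row in image]


def augment(image, rng, flip_prob=0.5):
    """Return a flipped copy with probability `flip_prob`, else an unchanged copy."""
    if rng.random() < flip_prob:
        return horizontal_flip(image)
    return [row[:] for row in image]


rng = random.Random(0)   # seeded generator for reproducibility
image = [
    [1, 2, 3],
    [4, 5, 6],
]

flipped = horizontal_flip(image)
print(flipped)  # [[3, 2, 1], [6, 5, 4]]

# Each training epoch can draw a fresh, randomly augmented version.
batch = [augment(image, rng) for _ in range(4)]
```

Flipping is only appropriate when it preserves the label; a mirrored cat is still a cat, but mirrored text or a left/right traffic sign may not mean the same thing.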

Applications of Convolutional Neural Networks

The power and versatility of CNNs have led to their adoption in a wide range of applications across various industries. Let’s explore some of the most impactful use cases:

1. Computer Vision

Computer vision is perhaps the most well-known application of CNNs. Some specific tasks include:

- Image Classification: Categorizing images into predefined classes (e.g., identifying animal species in wildlife photos).

- Object Detection: Identifying and locating multiple objects within an image (e.g., detecting pedestrians and vehicles in autonomous driving systems).

- Facial Recognition: Identifying or verifying a person from their facial features (e.g., unlocking smartphones, security systems).

- Image Segmentation: Partitioning an image into multiple segments or objects (e.g., identifying different tissues in medical imaging).

2. Natural Language Processing

While Recurrent Neural Networks (RNNs) are more commonly associated with text processing, CNNs have also found applications in NLP tasks such as:

- Text Classification

- Sentiment Analysis

- Machine Translation

3. Speech Recognition

CNNs can be applied to spectrograms of audio signals for tasks like:

- Speaker Identification

- Emotion Recognition from Speech

- Speech-to-Text Conversion

4. Medical Imaging

In the healthcare industry, CNNs have revolutionized medical image analysis:

- Detecting and classifying tumors in MRI scans

- Identifying abnormalities in X-rays and CT scans

- Assisting in early diagnosis of diseases like diabetic retinopathy

5. Autonomous Vehicles

CNNs play a crucial role in the perception systems of self-driving cars:

- Detecting and classifying objects on the road (vehicles, pedestrians, traffic signs)

- Lane detection and tracking

- Estimating the distance to objects

Advanced CNN Architectures

As research in deep learning has progressed, several advanced CNN architectures have been developed to tackle more complex tasks and improve performance. Some notable examples include:

1. VGGNet

Developed by the Visual Geometry Group at Oxford, VGGNet is known for its simplicity and depth. It uses small 3×3 convolutional filters throughout the network, demonstrating that a deep network with small filters can outperform shallower networks with larger filters.
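A quick parameter count shows why small filters are attractive. Two stacked 3×3 convolutions (stride 1) cover the same 5×5 receptive field as a single 5×5 convolution, but with fewer weights and an extra non-linearity in between. The sketch below counts weights only (biases ignored), and the channel count of 64 is an illustrative assumption.

```python
def conv_weights(kernel_size, in_channels, out_channels):
    """Number of weights in one convolutional layer, ignoring biases."""
    return kernel_size * kernel_size * in_channels * out_channels


channels = 64  # illustrative; same number of input and output channels

two_3x3 = 2 * conv_weights(3, channels, channels)
one_5x5 = conv_weights(5, channels, channels)

print(two_3x3)  # 73728
print(one_5x5)  # 102400
```

With equal input and output channels C, the comparison is 18C² weights against 25C², a saving of 28% regardless of C.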

2. ResNet (Residual Networks)

ResNet introduced the concept of skip connections or shortcut connections, which add a block’s input directly to its output so that the block only has to learn a residual function. This innovation made it possible to train much deeper networks (up to 152 layers in the original work) by easing the optimization difficulties, such as vanishing gradients, that hamper very deep plain networks.
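The idea of a skip connection can be sketched on plain vectors: the block computes some transformation F(x) and then adds the original input back, giving F(x) + x. In the example below the transformations are hypothetical stand-ins for the convolutional layers inside a real residual block, and the ReLU after the addition is one common arrangement.

```python
def relu(v):
    return max(0.0, v)


def residual_block(x, transform):
    """Apply `transform`, add the input back (skip connection), then ReLU."""
    fx = transform(x)
    return [relu(f + xi) for f, xi in zip(fx, x)]


def zero_transform(x):
    """Stand-in for layers that have learned nothing yet: F(x) = 0."""
    return [0.0 for _ in x]


def small_transform(x):
    """Stand-in for layers that learned a small correction to the input."""
    return [0.5 * v for v in x]


x = [1.0, 2.0, 3.0]

print(residual_block(x, zero_transform))   # [1.0, 2.0, 3.0]
print(residual_block(x, small_transform))  # [1.5, 3.0, 4.5]
```

When the transformation outputs zeros, the block simply passes its (non-negative) input through, so adding more blocks does not have to make the network worse. The addition also gives gradients a direct path back to earlier layers.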

3. Inception (GoogLeNet)

The Inception architecture, developed by Google, uses inception modules that apply multiple filter sizes to the same input. This allows the network to capture features at different scales simultaneously, improving efficiency and performance.

4. DenseNet

Within each dense block, DenseNet connects each layer to every subsequent layer in a feed-forward fashion, so every layer receives the feature maps of all preceding layers as input. This dense connectivity pattern encourages feature reuse and can substantially reduce the number of parameters, making the network more efficient.
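Because each layer's output is concatenated with everything that came before it, the number of input channels grows linearly through a dense block. The sketch below computes that growth; the starting channel count of 64 and the growth rate of 32 (the number of feature maps each layer adds) are illustrative assumptions.

```python
def dense_block_input_channels(initial_channels, growth_rate, num_layers):
    """Input channel count seen by each layer in a dense block.

    Layer i receives the block input plus the `growth_rate` feature maps
    produced by each of the i layers before it.
    """
    return [initial_channels + growth_rate * i for i in range(num_layers)]


channels_per_layer = dense_block_input_channels(
    initial_channels=64, growth_rate=32, num_layers=4
)
print(channels_per_layer)  # [64, 96, 128, 160]
```

Each layer only needs to produce a small number of new feature maps, since it can reuse all earlier ones directly; this is where the parameter savings come from.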

5. U-Net

U-Net is particularly popular in biomedical image segmentation. It has a U-shaped architecture with a contracting path to capture context and a symmetric expanding path that enables precise localization.

The Future of Convolutional Neural Networks

As we look to the future, several exciting trends and developments are shaping the evolution of CNNs:

1. Efficient Architectures

There’s a growing focus on developing CNN architectures that are not only accurate but also computationally efficient. This is particularly important for deploying CNNs on edge devices with limited computational resources.

2. Self-Supervised Learning

Self-supervised learning techniques are being developed to leverage large amounts of unlabeled data, reducing the need for extensive labeled datasets. This could significantly expand the applicability of CNNs in domains where labeled data is scarce.

3. Explainable AI

As CNNs are increasingly used in critical applications, there’s a growing need for interpretable models. Researchers are working on techniques to visualize and explain the decision-making process of CNNs, making them more transparent and trustworthy.

4. 3D and Video CNNs

While 2D CNNs have been highly successful for image analysis, there’s increasing interest in developing 3D CNNs for volumetric data (like 3D medical scans) and video analysis.

5. Integration with Other AI Techniques

CNNs are being combined with other AI techniques like reinforcement learning and generative models to create more powerful and versatile systems.

Conclusion

Convolutional Neural Networks have undoubtedly transformed the landscape of artificial intelligence, particularly in the realm of computer vision. Their ability to automatically learn hierarchical representations of visual data has led to breakthroughs in numerous applications, from facial recognition systems to medical diagnosis tools.

As we’ve explored in this article, the power of CNNs lies in their specialized architecture, which is loosely inspired by the organization of the animal visual cortex. By applying convolutional filters and pooling operations, CNNs can efficiently process large amounts of visual data, extracting relevant features and discarding irrelevant details.

The impact of CNNs extends far beyond image processing. Their principles have been successfully applied to other domains such as natural language processing and speech recognition, demonstrating their versatility and potential for solving complex pattern recognition problems.

As research in this field continues to advance, we can expect to see even more powerful and efficient CNN architectures, as well as novel applications in emerging fields. The integration of CNNs with other AI techniques, such as reinforcement learning and generative models, opens up exciting possibilities for creating more intelligent and capable systems.

However, as with any powerful technology, it’s crucial to consider the ethical implications of widespread CNN deployment, particularly in sensitive applications like facial recognition and medical diagnosis. Ensuring the transparency, fairness, and reliability of these systems will be a key challenge as they become increasingly integrated into our daily lives.

In conclusion, Convolutional Neural Networks represent a significant leap forward in our ability to create machines that can understand and interpret visual information. As we continue to refine and expand upon this technology, we’re moving closer to creating artificial intelligence systems that can perceive and interact with the world in ways that were once the realm of science fiction. The future of CNNs is bright, and their continued development promises to unlock new frontiers in artificial intelligence and its applications across diverse fields.