MongoDB Aggregation Pipeline: Powerful Data Processing for Modern Applications

In today’s data-driven world, the ability to efficiently process and analyze large volumes of information is crucial for businesses and developers alike. MongoDB, a popular NoSQL database, offers a powerful tool for this purpose: the Aggregation Pipeline. This feature allows users to perform complex data transformations and analysis operations with ease, making it an essential skill for anyone working with MongoDB.

In this comprehensive guide, we’ll dive deep into the MongoDB Aggregation Pipeline, exploring its concepts, stages, and practical applications. Whether you’re a beginner looking to enhance your database skills or an experienced developer aiming to optimize your data processing workflows, this article will provide valuable insights and hands-on examples to help you master the Aggregation Pipeline.

Table of Contents

- Introduction to MongoDB Aggregation Pipeline

- Understanding Pipeline Stages

- Common Aggregation Stages

- Advanced Features and Optimizations

- Real-World Examples and Use Cases

- Best Practices and Performance Considerations

- Comparison with Other Database Solutions

- Conclusion

1. Introduction to MongoDB Aggregation Pipeline

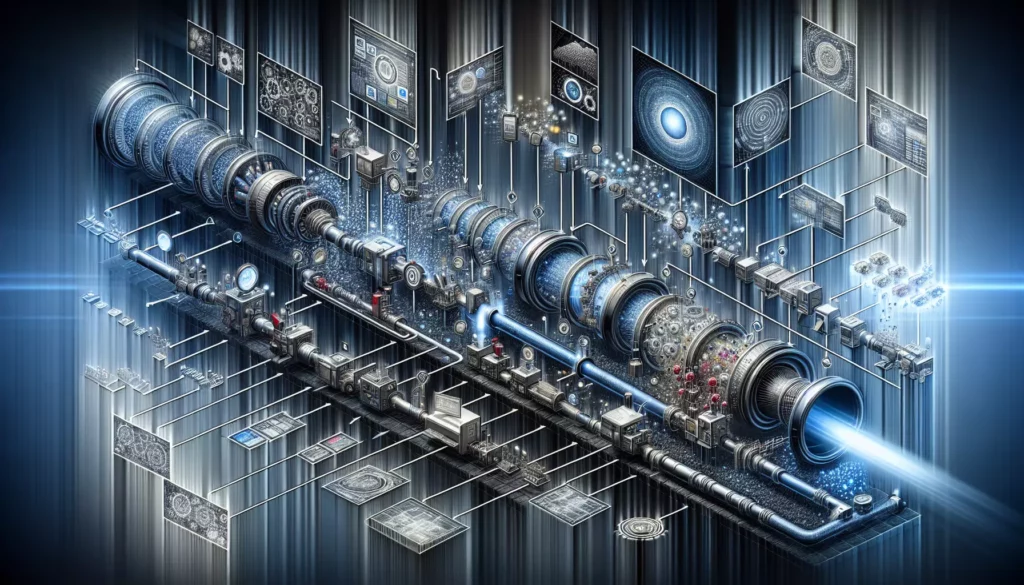

The MongoDB Aggregation Pipeline is a framework for data aggregation modeled on the concept of data processing pipelines. It allows you to process data from a collection through a series of stages, where each stage transforms the documents as they pass through the pipeline. This powerful feature enables you to perform complex operations on your data, such as filtering, grouping, sorting, and calculating aggregate values.

Key benefits of using the Aggregation Pipeline include:

- Efficient data processing: Operations are optimized for performance and can leverage indexes.

- Flexibility: You can combine multiple stages to create complex data transformations.

- Readability: The pipeline structure makes it easy to understand and maintain your data processing logic.

- Scalability: Aggregations can be distributed across sharded clusters for improved performance.

To use the Aggregation Pipeline, you typically call the aggregate() method on a collection, passing an array of pipeline stages. Here’s a basic example:

db.collection.aggregate([

{ $match: { status: "active" } },

{ $group: { _id: "$category", count: { $sum: 1 } } },

{ $sort: { count: -1 } }

])

This example demonstrates a simple pipeline that filters active documents, groups them by category, and sorts the results by count in descending order.

2. Understanding Pipeline Stages

Pipeline stages are the building blocks of the Aggregation Pipeline. Each stage performs a specific operation on the input documents and passes the results to the next stage. Understanding how these stages work and interact is crucial for creating effective aggregations.

Here are some important concepts related to pipeline stages:

- Order matters: The sequence of stages in the pipeline affects the final output.

- Document transformation: Each stage can modify, remove, or add fields to the documents.

- Memory constraints: By default, aggregation operations have a 100 MB memory limit.

- Output control: You can limit the number of documents in the output using stages like

$limit.

Let’s examine a more complex pipeline to illustrate these concepts:

db.sales.aggregate([

{ $match: { date: { $gte: new Date("2023-01-01"), $lt: new Date("2024-01-01") } } },

{ $group: {

_id: { $month: "$date" },

totalSales: { $sum: "$amount" },

avgSale: { $avg: "$amount" }

} },

{ $sort: { totalSales: -1 } },

{ $project: {

month: "$_id",

totalSales: 1,

avgSale: { $round: ["$avgSale", 2] },

_id: 0

} },

{ $limit: 5 }

])

This pipeline performs the following operations:

- Filters sales documents for the year 2023

- Groups the results by month, calculating total and average sales

- Sorts the groups by total sales in descending order

- Reshapes the output documents, rounding the average sale to two decimal places

- Limits the output to the top 5 months

3. Common Aggregation Stages

While MongoDB offers a wide array of aggregation stages, some are more frequently used than others. Let’s explore some of the most common stages and their use cases:

$match

The $match stage filters the documents to pass only those that match the specified condition(s). It’s often used early in the pipeline to reduce the number of documents processed by subsequent stages.

{ $match: { status: "completed", total: { $gt: 100 } } }

$group

The $group stage groups input documents by a specified identifier expression and applies accumulator expressions to each group. This is useful for performing calculations on grouped data.

{ $group: {

_id: "$category",

count: { $sum: 1 },

avgPrice: { $avg: "$price" }

} }

$sort

The $sort stage sorts all input documents and returns them to the pipeline in sorted order. You can sort on multiple fields and specify ascending (1) or descending (-1) order.

{ $sort: { age: -1, name: 1 } }

$project

The $project stage reshapes documents in the stream, including, excluding, or renaming fields. It’s also used to create computed fields or apply expressions to existing fields.

{ $project: {

name: 1,

age: 1,

birthYear: { $subtract: [2023, "$age"] },

_id: 0

} }

$unwind

The $unwind stage deconstructs an array field from the input documents to output a document for each element. This is particularly useful when you need to perform operations on array elements individually.

{ $unwind: "$tags" }

$lookup

The $lookup stage performs a left outer join to another collection in the same database. This allows you to combine data from multiple collections in a single aggregation pipeline.

{ $lookup: {

from: "orders",

localField: "_id",

foreignField: "customerId",

as: "customerOrders"

} }

Understanding these common stages and how to combine them effectively is crucial for creating powerful aggregations that can handle a wide range of data processing tasks.

4. Advanced Features and Optimizations

As you become more comfortable with the basics of the Aggregation Pipeline, you can start exploring its advanced features and optimizations to tackle more complex data processing challenges and improve performance.

Aggregation Variables

MongoDB provides several variables that you can use within your pipeline stages to access metadata about the current document or operation:

$$ROOT: Refers to the root document, i.e., the top-level document currently being processed.$$CURRENT: Refers to the document at the current stage of the pipeline.$$DESCEND: Used in$graphLookupto access the document at the previous recursion depth.

Example using $$ROOT:

{ $project: {

originalDocument: "$$ROOT",

newField: { $toUpper: "$name" }

} }

Aggregation Expressions

Aggregation expressions are powerful tools that allow you to perform complex calculations and transformations within your pipeline stages. Some advanced expression operators include:

$cond: Conditional operator for if-then-else logic$switch: Multi-way conditional operator$map: Applies an expression to each item in an array$reduce: Reduces an array to a single value$filter: Selects a subset of an array based on a condition

Example using $cond and $map:

{ $project: {

name: 1,

grade: {

$cond: {

if: { $gte: ["$score", 90] },

then: "A",

else: {

$cond: {

if: { $gte: ["$score", 80] },

then: "B",

else: "C"

}

}

}

},

adjustedScores: {

$map: {

input: "$scores",

as: "score",

in: { $add: ["$$score", 5] }

}

}

} }

Aggregation Accumulators

Accumulators are operators that maintain their state as documents progress through the pipeline. They are typically used with the $group stage but can also be used with other stages like $project and $addFields. Some useful accumulators include:

$sum: Calculates the sum of numeric values$avg: Calculates the average of numeric values$minand$max: Find the minimum and maximum values$push: Creates an array of all values$addToSet: Creates an array of unique values

Example using multiple accumulators:

{ $group: {

_id: "$category",

totalSales: { $sum: "$amount" },

avgSale: { $avg: "$amount" },

minSale: { $min: "$amount" },

maxSale: { $max: "$amount" },

uniqueCustomers: { $addToSet: "$customerId" }

} }

Performance Optimizations

To optimize the performance of your aggregation pipelines, consider the following techniques:

- Use indexes: Ensure that your

$matchand$sortstages can utilize indexes for faster execution. - Project early: Use

$projector$unsetstages early in the pipeline to reduce the amount of data processed in subsequent stages. - Limit memory usage: Use

$limitand$skipstages to control the number of documents processed. - Use

allowDiskUseoption: For large datasets that exceed the 100 MB memory limit, enable disk usage. - Avoid unnecessary stages: Combine operations where possible to reduce the number of pipeline stages.

Example of an optimized pipeline:

db.orders.aggregate([

{ $match: { status: "completed", date: { $gte: new Date("2023-01-01") } } },

{ $project: { customerId: 1, amount: 1, date: 1 } },

{ $group: {

_id: { $dateToString: { format: "%Y-%m", date: "$date" } },

totalSales: { $sum: "$amount" },

uniqueCustomers: { $addToSet: "$customerId" }

} },

{ $sort: { _id: 1 } }

], { allowDiskUse: true })

This pipeline uses indexing on the status and date fields, projects only necessary fields, and allows disk usage for processing large datasets.

5. Real-World Examples and Use Cases

To better understand the power and flexibility of the MongoDB Aggregation Pipeline, let’s explore some real-world examples and use cases. These scenarios demonstrate how aggregations can be applied to solve common data analysis and processing challenges.

Example 1: Sales Analysis Dashboard

Suppose you’re building a dashboard for an e-commerce platform that needs to display various sales metrics. Here’s an aggregation pipeline that could power such a dashboard:

db.sales.aggregate([

{ $match: {

date: { $gte: new Date("2023-01-01"), $lt: new Date("2024-01-01") }

} },

{ $group: {

_id: { $dateToString: { format: "%Y-%m", date: "$date" } },

totalRevenue: { $sum: "$amount" },

averageOrderValue: { $avg: "$amount" },

totalOrders: { $sum: 1 },

uniqueCustomers: { $addToSet: "$customerId" }

} },

{ $project: {

month: "$_id",

totalRevenue: 1,

averageOrderValue: { $round: ["$averageOrderValue", 2] },

totalOrders: 1,

uniqueCustomers: { $size: "$uniqueCustomers" },

_id: 0

} },

{ $sort: { month: 1 } }

])

This pipeline calculates monthly sales metrics, including total revenue, average order value, total number of orders, and unique customer count.

Example 2: Content Recommendation System

For a content platform that wants to recommend articles based on user reading history, you could use an aggregation pipeline like this:

db.userActivity.aggregate([

{ $match: { userId: "user123" } },

{ $unwind: "$readArticles" },

{ $group: {

_id: "$readArticles.category",

count: { $sum: 1 }

} },

{ $sort: { count: -1 } },

{ $limit: 3 },

{ $lookup: {

from: "articles",

let: { category: "$_id" },

pipeline: [

{ $match: {

$expr: {

$and: [

{ $eq: ["$category", "$$category"] },

{ $gt: ["$publishDate", new Date("2023-06-01")] }

]

}

} },

{ $sample: { size: 5 } }

],

as: "recommendedArticles"

} },

{ $unwind: "$recommendedArticles" },

{ $group: {

_id: null,

recommendations: { $push: "$recommendedArticles" }

} },

{ $project: {

_id: 0,

recommendations: { $slice: ["$recommendations", 10] }

} }

])

This pipeline finds the top 3 categories a user has read, then recommends up to 10 recent articles from those categories.

Example 3: Geospatial Analysis

For a location-based service that needs to find nearby points of interest, you could use a pipeline like this:

db.locations.aggregate([

{ $geoNear: {

near: { type: "Point", coordinates: [-73.9667, 40.78] },

distanceField: "distance",

maxDistance: 5000,

query: { type: "restaurant" },

spherical: true

} },

{ $group: {

_id: "$cuisine",

count: { $sum: 1 },

avgRating: { $avg: "$rating" },

restaurants: { $push: { name: "$name", distance: "$distance" } }

} },

{ $project: {

cuisine: "$_id",

count: 1,

avgRating: { $round: ["$avgRating", 1] },

nearestRestaurants: { $slice: ["$restaurants", 3] },

_id: 0

} },

{ $sort: { count: -1 } },

{ $limit: 5 }

])

This pipeline finds restaurants within 5 km of a given location, groups them by cuisine, and returns the top 5 cuisines with their counts, average ratings, and the 3 nearest restaurants for each cuisine.

Example 4: Time Series Data Analysis

For analyzing time series data, such as IoT sensor readings, you could use a pipeline like this:

db.sensorData.aggregate([

{ $match: {

deviceId: "device001",

timestamp: { $gte: new Date("2023-07-01"), $lt: new Date("2023-08-01") }

} },

{ $group: {

_id: {

$dateTrunc: {

date: "$timestamp",

unit: "hour",

binSize: 6

}

},

avgTemperature: { $avg: "$temperature" },

minTemperature: { $min: "$temperature" },

maxTemperature: { $max: "$temperature" },

readingsCount: { $sum: 1 }

} },

{ $project: {

timeBlock: { $dateToString: { format: "%Y-%m-%d %H:00", date: "$_id" } },

avgTemperature: { $round: ["$avgTemperature", 1] },

minTemperature: { $round: ["$minTemperature", 1] },

maxTemperature: { $round: ["$maxTemperature", 1] },

readingsCount: 1,

_id: 0

} },

{ $sort: { timeBlock: 1 } }

])

This pipeline analyzes temperature readings from a sensor, grouping them into 6-hour blocks and calculating average, minimum, and maximum temperatures for each block.

6. Best Practices and Performance Considerations

To ensure that your MongoDB Aggregation Pipelines are efficient, maintainable, and performant, consider the following best practices and performance considerations:

1. Optimize Early in the Pipeline

Place $match and $limit stages as early as possible in the pipeline to reduce the amount of data processed by subsequent stages. This can significantly improve performance, especially for large datasets.

db.collection.aggregate([

{ $match: { status: "active" } },

{ $limit: 1000 },

// Other stages...

])

2. Use Indexes Effectively

Ensure that your $match, $sort, and $group stages can utilize indexes. This is particularly important for large collections. Use the explain() method to verify index usage:

db.collection.aggregate([

{ $match: { status: "active", createdAt: { $gte: new Date("2023-01-01") } } },

// Other stages...

]).explain("executionStats")

3. Project Only Necessary Fields

Use $project or $unset stages to include only the fields you need for your aggregation. This reduces memory usage and improves performance.

db.collection.aggregate([

{ $match: { status: "active" } },

{ $project: { name: 1, email: 1, createdAt: 1 } },

// Other stages...

])

4. Use Aggregation Variables

Leverage aggregation variables like $$ROOT and $$CURRENT to reference the entire document or the current state of the document in the pipeline. This can make your pipelines more readable and efficient.

db.collection.aggregate([

{ $addFields: {

fullDocument: "$$ROOT",

currentState: "$$CURRENT"

} },

// Other stages...

])

5. Avoid Unnecessary $unwind Operations

While $unwind is useful for working with arrays, it can significantly increase the number of documents in your pipeline. Use it judiciously and consider alternatives when possible.

6. Use $lookup Wisely

The $lookup stage can be expensive, especially with large collections. Use it sparingly and ensure that the foreign collection is indexed on the join field.

7. Leverage the Aggregation Pipeline Builder

Use MongoDB Compass or other GUI tools that provide an Aggregation Pipeline Builder. These tools can help you construct and visualize your pipelines more easily.

8. Monitor and Optimize for Memory Usage

Be aware of the 100 MB memory limit for aggregation operations. Use the allowDiskUse option for operations that may exceed this limit, but be cautious as it can impact performance.

db.collection.aggregate([

// Stages...

], { allowDiskUse: true })

9. Use Aggregation Expressions Efficiently

When using complex expressions, try to simplify them or break them down into multiple stages if possible. This can improve readability and potentially performance.

10. Test with Representative Data

Always test your aggregation pipelines with a dataset that is representative of your production data in terms of size and complexity. This will help you identify performance issues early.

11. Use $merge for Large Result Sets

If your aggregation result is too large to fit in memory, consider using the $merge stage to output the results to a new or existing collection.

db.collection.aggregate([

// Stages...

{ $merge: {

into: "outputCollection",

on: "_id",

whenMatched: "replace",

whenNotMatched: "insert"

} }

])

12. Leverage Parallel Processing

For sharded clusters, ensure that your aggregation pipeline can benefit from parallel processing across shards. Stages like $match, $project, and $limit can be parallelized, while stages like $group and $sort typically require data from all shards.

7. Comparison with Other Database Solutions

While MongoDB’s Aggregation Pipeline is a powerful tool for data processing and analysis, it’s worth comparing it to other database solutions to understand its strengths and potential limitations:

MongoDB Aggregation vs. SQL

Similarities:

- Both allow complex data transformations and aggregations.

- Both support joining data from multiple sources (collections in MongoDB, tables in SQL).

- Both can perform filtering, grouping, and sorting operations.

Differences:

- MongoDB’s pipeline approach can be more intuitive for complex, multi-step transformations.

- SQL typically has better support for complex joins and set operations.

- MongoDB’s aggregation is more flexible with unstructured and semi-structured data.

- SQL has a more standardized syntax across different database systems.

MongoDB Aggregation vs. Hadoop/MapReduce

Similarities:

- Both can process large volumes of data.

- Both support complex data transformations and aggregations.

Differences:

- MongoDB Aggregation is typically easier to use and requires less setup.

- Hadoop/MapReduce is better suited for extremely large datasets that don’t fit on a single machine.

- MongoDB Aggregation is tightly integrated with the database, while Hadoop is a separate ecosystem.

MongoDB Aggregation vs. Apache Spark

Similarities:

- Both offer powerful data processing capabilities.

- Both support a wide range of data transformations and aggregations.

Differences:

- Spark is better suited for large-scale distributed data processing across multiple data sources.

- MongoDB Aggregation is more tightly integrated with the database and can be more efficient for MongoDB-specific operations.

- Spark offers more advanced machine learning and graph processing capabilities.

MongoDB Aggregation vs. Elasticsearch Aggregations

Similarities:

- Both offer powerful aggregation capabilities.

- Both are designed to work with semi-structured data.

Differences:

- Elasticsearch aggregations are particularly strong for real-time analytics and full-text search scenarios.

- MongoDB Aggregation offers more flexibility for complex data transformations.

- Elasticsearch is optimized for search and analytics workloads, while MongoDB is a more general-purpose database.

In summary, MongoDB’s Aggregation Pipeline shines in scenarios where you need to perform complex data transformations and aggregations on flexible, document-based data structures. It’s particularly well-suited for applications that are already using MongoDB as their primary database. However, for very large-scale distributed processing or specialized analytics tasks, other solutions like Hadoop, Spark, or dedicated analytics databases might be more appropriate.

8. Conclusion

The MongoDB Aggregation Pipeline is a powerful and flexible tool for data processing and analysis within the MongoDB ecosystem. Throughout this comprehensive guide, we’ve explored its core concepts, common stages, advanced features, and best practices. We’ve seen how it can be applied to solve real-world problems across various domains, from e-commerce analytics to content recommendation systems and IoT data analysis.

Key takeaways from this guide include:

- The pipeline approach allows for intuitive, step-by-step data transformations.

- A wide range of stages and operators provide flexibility for complex operations.

- Proper use of indexes and optimization techniques is crucial for performance.

- The Aggregation Pipeline can handle various data processing tasks, from simple grouping and filtering to complex geospatial and time series analysis.

- While powerful, it’s important to consider the strengths and limitations of the Aggregation Pipeline compared to other data processing solutions.

As you continue to work with MongoDB and the Aggregation Pipeline, remember that practice and experimentation are key to mastering this tool. Don’t hesitate to explore the MongoDB documentation for more advanced features and keep an eye on new developments in MongoDB releases.

Whether you’re building data-driven applications, performing business intelligence tasks, or tackling complex data analysis problems, the MongoDB Aggregation Pipeline offers a robust and scalable solution. By leveraging its capabilities effectively, you can unlock valuable insights from your data and drive informed decision-making in your projects and organizations.