Understanding the Role of Load Balancers in System Design

In the world of modern web applications and distributed systems, handling high traffic and ensuring optimal performance are crucial. As your application grows and attracts more users, a single server may no longer be sufficient to handle the increasing load. This is where load balancers come into play. In this guide, we’ll explore the role of load balancers in system design, their importance, types, algorithms, and best practices for implementation.

What is a Load Balancer?

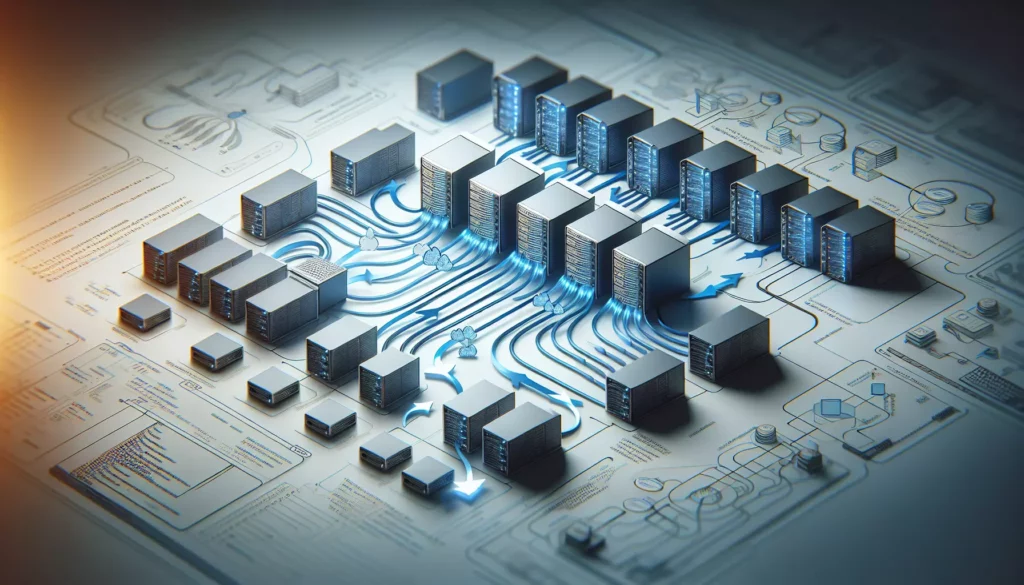

A load balancer is a critical component in distributed systems that acts as a traffic cop, distributing incoming network requests across multiple servers. Its primary purpose is to ensure that no single server becomes overwhelmed with too many requests, thereby improving the overall system’s reliability, scalability, and performance.

Load balancers can be implemented as hardware appliances, software applications, or cloud services. They sit between the clients (users or other services) and the backend servers, intelligently routing requests to the most appropriate server based on various factors and algorithms.

Why are Load Balancers Important?

Load balancers play a crucial role in modern system design for several reasons:

- High Availability: By distributing traffic across multiple servers, load balancers ensure that if one server fails, the others can still handle incoming requests, minimizing downtime.

- Scalability: As your application grows, you can easily add more servers to your backend pool, and the load balancer will automatically start routing traffic to the new servers.

- Performance: By evenly distributing requests, load balancers prevent any single server from becoming a bottleneck, ensuring faster response times for users.

- Flexibility: Load balancers allow you to perform maintenance or updates on individual servers without affecting the overall system’s availability.

- Security: Some load balancers can act as a first line of defense against DDoS attacks by filtering out malicious traffic.

Types of Load Balancers

Load balancers can be categorized based on the layer of the OSI (Open Systems Interconnection) model they operate on:

1. Layer 4 Load Balancers

Layer 4 load balancers work at the transport layer (TCP/UDP) of the OSI model. They make routing decisions based on network information such as IP addresses and port numbers, without inspecting the content of the packets.

Advantages:

- Fast and efficient as they don’t need to decrypt traffic

- Protocol-agnostic: can balance any application protocol that runs over TCP or UDP (for example HTTP, HTTPS, or DNS)

- Lower latency compared to Layer 7 load balancers

Disadvantages:

- Limited ability to make routing decisions based on application-specific data

- Cannot perform content-based routing, and typically passes SSL/TLS traffic through rather than terminating it
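As a rough illustration of the Layer 4 idea, the sketch below picks a backend using only connection-level information (source and destination IP address and port) and never looks at the payload. The `Connection` type, the backend addresses, and the `pick_backend` helper are hypothetical names chosen for this example, not part of any particular product.

```python
import zlib
from typing import NamedTuple


class Connection(NamedTuple):
    """Connection-level fields a Layer 4 balancer can see (hypothetical type)."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


# Hypothetical backend pool for illustration.
BACKENDS = ["10.0.1.10:8080", "10.0.1.11:8080", "10.0.1.12:8080"]


def pick_backend(conn: Connection) -> str:
    """Hash the connection tuple so every packet of a flow maps to one backend."""
    key = f"{conn.src_ip}:{conn.src_port}->{conn.dst_ip}:{conn.dst_port}"
    return BACKENDS[zlib.crc32(key.encode("utf-8")) % len(BACKENDS)]


conn = Connection("203.0.113.7", 51432, "198.51.100.1", 443)
print(pick_backend(conn))
```

Because the decision depends only on the tuple, the same connection always lands on the same backend while the pool is unchanged, and no decryption is needed.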

2. Layer 7 Load Balancers

Layer 7 load balancers operate at the application layer of the OSI model. They can make routing decisions based on the content of the request, such as HTTP headers, cookies, or specific URLs.

Advantages:

- Can make more intelligent routing decisions based on application-specific data

- Ability to perform SSL termination, saving backend servers from the overhead of encryption/decryption

- Can handle content-based routing, allowing for more complex architectures

Disadvantages:

- Higher latency due to the need to inspect and process application-layer data

- More resource-intensive compared to Layer 4 load balancers
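Content-based routing at Layer 7 can be sketched as a lookup on the request path and headers. This is a minimal example under stated assumptions: the routing table, the pool names, and the `X-Beta-User` header are all made up for illustration.

```python
# Hypothetical routing table: URL path prefix -> backend pool.
ROUTES = {
    "/api/": ["api-1", "api-2"],
    "/static/": ["static-1"],
}
DEFAULT_POOL = ["web-1", "web-2"]
BETA_POOL = ["beta-1"]


def choose_pool(path: str, headers: dict[str, str]) -> list[str]:
    """Select a backend pool from request content (path and headers)."""
    # Example header rule: send beta testers to a separate pool.
    if headers.get("X-Beta-User") == "true":
        return BETA_POOL
    # Longest matching prefix wins so specific routes override general ones.
    for prefix in sorted(ROUTES, key=len, reverse=True):
        if path.startswith(prefix):
            return ROUTES[prefix]
    return DEFAULT_POOL


print(choose_pool("/api/users", {}))
print(choose_pool("/index.html", {}))
print(choose_pool("/index.html", {"X-Beta-User": "true"}))
```

Once a pool is chosen, any of the algorithms in the next section could pick the individual server within it.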

Load Balancing Algorithms

Load balancers use various algorithms to determine how to distribute incoming requests among the available servers. Here are some common algorithms:

1. Round Robin

This is one of the simplest algorithms where requests are distributed sequentially to each server in the pool. It’s easy to implement and works well when all servers have similar capabilities.

    servers = ["server1", "server2", "server3"]
    current_index = 0

    def round_robin():
        """Return the next server in the pool, wrapping around at the end."""
        global current_index
        server = servers[current_index]
        current_index = (current_index + 1) % len(servers)
        return server

2. Least Connections

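This algorithm routes new requests to the server with the fewest active connections. It’s useful when servers have varying capabilities or when requests have different processing times.

    # Active connection count per server. The balancer must increment a count
    # when it opens a connection and decrement it when the connection closes.
    servers = {
        "server1": 0,
        "server2": 0,
        "server3": 0,
    }

    def least_connections():
        # Pick the server with the fewest active connections.
        return min(servers, key=servers.get)

3. Weighted Round Robin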

Similar to Round Robin, but each server is assigned a weight based on its capabilities. Servers with higher weights receive more requests.

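    import random

    servers = {
        "server1": 3,
        "server2": 2,
        "server3": 1,
    }

    def weighted_round_robin():
        # Randomized approximation: each call picks a server with probability
        # proportional to its weight instead of cycling in a fixed order.
        total_weight = sum(servers.values())
        r = random.randint(1, total_weight)
        for server, weight in servers.items():
            if r <= weight:
                return server
            r -= weight

4. IP Hash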

This algorithm uses the client’s IP address to determine which server to route the request to. As long as the server pool does not change, requests from the same client IP go to the same server, which can be useful for maintaining session data.

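    import zlib

    servers = ["server1", "server2", "server3"]

    def ip_hash(client_ip):
        # CRC32 is stable across processes and spreads addresses more evenly
        # than summing character codes, which collides for reordered digits.
        hash_value = zlib.crc32(client_ip.encode("utf-8"))
        return servers[hash_value % len(servers)]

Implementing Load Balancing in Your System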

When implementing load balancing in your system design, consider the following best practices:

1. Choose the Right Type of Load Balancer

Decide between Layer 4 and Layer 7 load balancers based on your application’s needs. If you need content-based routing or SSL termination, go for a Layer 7 load balancer. If raw performance is more important, a Layer 4 load balancer might be a better choice.

2. Select an Appropriate Algorithm

Choose a load balancing algorithm that suits your application’s characteristics. If all your servers have similar capabilities, Round Robin might be sufficient. If your servers have different capacities, consider Weighted Round Robin; if you need session affinity, consider IP Hash; and if request processing times vary widely, Least Connections is usually a better fit.
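For servers with different capacities, a deterministic weighted rotation can be sketched as follows. This uses the "smooth" weighted round robin technique (each server accumulates its weight, the largest accumulator wins and is then reduced by the total weight). The class name and the weights are assumptions for illustration.

```python
# Hypothetical weights: server1 should receive 3 of every 6 requests.
WEIGHTS = {"server1": 3, "server2": 2, "server3": 1}


class SmoothWeightedRoundRobin:
    def __init__(self, weights):
        self.weights = dict(weights)
        self.current = {server: 0 for server in weights}
        self.total = sum(weights.values())

    def next_server(self):
        # Every server gains its own weight on each pick.
        for server, weight in self.weights.items():
            self.current[server] += weight
        # The server with the largest accumulated value is chosen...
        chosen = max(self.current, key=self.current.get)
        # ...and pays back the total so the others can catch up.
        self.current[chosen] -= self.total
        return chosen


balancer = SmoothWeightedRoundRobin(WEIGHTS)
picks = [balancer.next_server() for _ in range(6)]
print(picks)
```

Over each full cycle of six picks, every server is selected exactly as many times as its weight, and the heavier server's turns are spread out rather than sent back to back.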

3. Health Checks

Implement health checks to ensure that the load balancer only routes traffic to healthy servers. This can be done through periodic ping tests or by checking specific endpoints on your application servers.

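    import requests

    def health_check(server):
        """Return True if the server's /health endpoint answers with HTTP 200."""
        try:
            response = requests.get(f"http://{server}/health", timeout=5)
            return response.status_code == 200
        except requests.RequestException:
            return False

    def get_healthy_servers():
        # `servers` is the pool defined in the earlier examples.
        return [server for server in servers if health_check(server)]

4. SSL Termination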

If you’re using HTTPS, consider implementing SSL termination at the load balancer level. This offloads the CPU-intensive task of encryption/decryption from your application servers, improving overall performance.

5. Session Persistence

If your application requires session persistence (e.g., for shopping carts or user authentication), implement sticky sessions. This ensures that requests from a particular client are always routed to the same server.

    session_map = {}

    def sticky_session(client_id):
        # Reuse the server already assigned to this client, if any.
        if client_id in session_map:
            return session_map[client_id]
        server = least_connections()  # Or any other algorithm
        session_map[client_id] = server
        return server

6. Monitoring and Logging

Implement robust monitoring and logging for your load balancer. This will help you identify performance bottlenecks, track the health of your servers, and troubleshoot issues when they arise.
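As a minimal sketch of what such monitoring might record, the example below wraps each forwarded request to track request counts, errors, and latency per server. The `record_request` and `average_latency` helpers and the counter field names are hypothetical. A production setup would more likely export these numbers to a dedicated metrics system.

```python
import logging
import time
from collections import defaultdict

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("load_balancer")

# Per-server counters; the field names are illustrative.
stats = defaultdict(lambda: {"requests": 0, "errors": 0, "total_seconds": 0.0})


def record_request(server, handler):
    """Call handler() on behalf of `server`, recording latency and errors."""
    start = time.perf_counter()
    try:
        return handler()
    except Exception:
        stats[server]["errors"] += 1
        log.exception("request to %s failed", server)
        raise
    finally:
        elapsed = time.perf_counter() - start
        stats[server]["requests"] += 1
        stats[server]["total_seconds"] += elapsed
        log.info("server=%s latency_ms=%.2f", server, elapsed * 1000)


def average_latency(server):
    """Mean request latency in seconds, or 0.0 if nothing was recorded."""
    s = stats[server]
    return s["total_seconds"] / s["requests"] if s["requests"] else 0.0


record_request("server1", lambda: "ok")
print(stats["server1"]["requests"], average_latency("server1"))
```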

7. Scalability

Design your load balancing solution to be scalable. This might involve using multiple load balancers in a hierarchical structure or leveraging cloud-based load balancing services that can automatically scale based on traffic patterns.

Load Balancing in Cloud Environments

Major cloud providers offer robust load balancing solutions that can be easily integrated into your system design:

Amazon Web Services (AWS)

AWS provides several load balancing options:

- Application Load Balancer (ALB): A Layer 7 load balancer for HTTP/HTTPS traffic

- Network Load Balancer (NLB): A Layer 4 load balancer for TCP/UDP traffic

- Classic Load Balancer: The original AWS load balancer, which can operate at both Layer 4 and Layer 7

Google Cloud Platform (GCP)

GCP offers the following load balancing solutions:

- HTTP(S) Load Balancing: A global Layer 7 load balancer for HTTP/HTTPS traffic

- Network Load Balancing: A regional Layer 4 load balancer for TCP/UDP traffic

- Internal Load Balancing: For load balancing traffic within your virtual network

Microsoft Azure

Azure provides these load balancing options:

- Azure Load Balancer: A Layer 4 load balancer for TCP/UDP traffic

- Application Gateway: A Layer 7 load balancer with additional features like SSL termination and Web Application Firewall

- Traffic Manager: A DNS-based traffic load balancer for routing traffic across Azure regions

Common Challenges and Solutions

While load balancers significantly improve system performance and reliability, they can also introduce some challenges:

1. Single Point of Failure

Challenge: If your load balancer fails, it can bring down your entire system.

Solution: Implement redundant load balancers in an active-passive or active-active configuration. Many cloud providers offer highly available load balancing solutions out of the box.
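The active-passive idea can be sketched as "use the first healthy balancer in priority order". The names `pick_active`, `lb-primary`, and `lb-standby` are hypothetical, and the health check is simulated. In real deployments the switch is usually made by a floating virtual IP or DNS rather than by application code.

```python
from typing import Callable, Sequence


def pick_active(balancers: Sequence[str], is_healthy: Callable[[str], bool]) -> str:
    """Return the first healthy load balancer in priority order."""
    for lb in balancers:
        if is_healthy(lb):
            return lb
    raise RuntimeError("no healthy load balancer available")


# Hypothetical names; the first entry is the primary, the second the standby.
balancers = ["lb-primary", "lb-standby"]
down = {"lb-primary"}  # simulate a primary outage

print(pick_active(balancers, lambda lb: lb not in down))
```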

2. Session Persistence

Challenge: In stateful applications, routing requests from the same client to different servers can cause issues with user sessions.

Solution: Implement sticky sessions or move session data to a shared storage system like Redis or Memcached.
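To show why shared storage removes the need for stickiness, here is a small sketch in which two servers handle requests for the same session. `SessionStore` is an in-memory stand-in for a shared store such as Redis or Memcached, and `handle_add_to_cart` is a hypothetical request handler.

```python
class SessionStore:
    """In-memory stand-in for a shared store such as Redis or Memcached."""

    def __init__(self):
        self._data = {}

    def get(self, session_id):
        return self._data.get(session_id, {})

    def set(self, session_id, session):
        self._data[session_id] = session


store = SessionStore()  # one store shared by every application server


def handle_add_to_cart(server_name, session_id, item):
    """Any server can handle the request because state lives in the store."""
    session = store.get(session_id)
    cart = session.get("cart", [])
    session = {**session, "cart": cart + [item]}
    store.set(session_id, session)
    return f"{server_name} cart={session['cart']}"


print(handle_add_to_cart("server1", "abc123", "book"))
print(handle_add_to_cart("server2", "abc123", "pen"))
```

The second request is served by a different server yet still sees the item added by the first, so the load balancer is free to use any algorithm.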

3. SSL/TLS Termination

Challenge: Handling SSL/TLS at the load balancer level can be resource-intensive and potentially expose decrypted traffic within your internal network.

Solution: Use dedicated SSL/TLS acceleration hardware or optimize your load balancer’s SSL configuration. Ensure that internal traffic is also encrypted if necessary.

4. Uneven Load Distribution

Challenge: Simple algorithms like Round Robin can lead to uneven load distribution if servers have different capacities or if requests have varying processing times.

Solution: Use more sophisticated algorithms like Least Connections or implement custom logic that takes into account server health and capacity.
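One possible form of such custom logic is a capacity-aware variant of Least Connections that skips unhealthy servers and compares active connections relative to capacity. The pool contents, the field names, and the `weighted_least_connections` helper are assumptions made for this sketch.

```python
# Hypothetical pool: active connection count and relative capacity per server.
pool = {
    "server1": {"active": 8, "capacity": 4, "healthy": True},
    "server2": {"active": 3, "capacity": 1, "healthy": True},
    "server3": {"active": 0, "capacity": 2, "healthy": False},
}


def weighted_least_connections(pool):
    """Pick the healthy server with the lowest active/capacity ratio."""
    candidates = {name: s for name, s in pool.items() if s["healthy"]}
    if not candidates:
        raise RuntimeError("no healthy servers")
    return min(
        candidates,
        key=lambda name: candidates[name]["active"] / candidates[name]["capacity"],
    )


choice = weighted_least_connections(pool)
pool[choice]["active"] += 1  # count the new connection
print(choice)
```

Here server1 has more connections in absolute terms than server2 but is less loaded relative to its capacity, and server3 is ignored because it is marked unhealthy.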

Conclusion

Load balancers are a crucial component in modern system design, enabling applications to handle high traffic volumes, improve reliability, and scale effectively. By distributing incoming requests across multiple servers, load balancers ensure that no single server becomes a bottleneck, thereby enhancing overall system performance and user experience.

When implementing load balancing in your system, consider factors such as the type of load balancer (Layer 4 or Layer 7), the appropriate algorithm for your use case, and additional features like health checks and SSL termination. Cloud providers offer robust load balancing solutions that can be easily integrated into your architecture, simplifying the process of implementing and managing load balancers at scale.

As you progress in your coding journey and prepare for technical interviews, especially for major tech companies, understanding load balancers and their role in system design is crucial. This knowledge will help you design scalable and resilient systems capable of handling real-world traffic and challenges.

Remember, load balancing is just one aspect of system design. To build truly robust and scalable applications, you’ll need to consider other factors such as caching strategies, database design, and microservices architecture. Keep learning and practicing, and you’ll be well-prepared to tackle complex system design challenges in your future career.