The Evolution of Algorithms Through Computing History

In the vast landscape of computer science, few concepts have been as influential and enduring as algorithms. These step-by-step procedures for solving problems or performing tasks have been the backbone of computing since its inception. As we delve into the fascinating journey of algorithms through computing history, we’ll explore how these mathematical constructs have shaped the digital world we live in today.

The Birth of Algorithms: Ancient Roots

While we often associate algorithms with modern computing, their origins stretch back millennia. The term “algorithm” itself derives from “Algoritmi,” the Latinized name of the 9th-century Persian mathematician Muhammad ibn Musa al-Khwarizmi, whose works, once translated into Latin, introduced Hindu-Arabic numerals and algebraic methods to Europe.

However, the concept of algorithmic thinking predates even al-Khwarizmi. Ancient civilizations developed systematic procedures for tasks like arithmetic and geometry. The Babylonians, for instance, used algorithms for calculating square roots as early as 1600 BCE.
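The iterative square-root procedure usually called the Babylonian method (also known as Heron's method; how closely it matches what Babylonian scribes actually did is debated) makes a good first example of an algorithm. The Python below is a minimal sketch; the function name `babylonian_sqrt` and the `tolerance` parameter are illustrative assumptions, not a historical reconstruction.

```python
def babylonian_sqrt(s: float, tolerance: float = 1e-12) -> float:
    """Approximate the square root of s by repeatedly averaging x and s / x."""
    if s < 0:
        raise ValueError("s must be non-negative")
    if s == 0:
        return 0.0
    # Start at or above the true root; the averages then decrease toward it.
    x = s if s >= 1 else 1.0
    while True:
        # The true root always lies between x and s / x, so their average is closer.
        next_x = (x + s / x) / 2
        if abs(next_x - x) <= tolerance * next_x:
            return next_x
        x = next_x


print(babylonian_sqrt(2.0))  # approximately 1.41421356...
```

Each step roughly doubles the number of correct digits, which is why a handful of iterations was enough for hand calculation.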

Early Mathematical Algorithms

Some of the earliest known algorithms include:

- The Euclidean algorithm for finding the greatest common divisor of two numbers (circa 300 BCE)

- The Sieve of Eratosthenes for finding prime numbers (circa 200 BCE)

- Various methods for approximating pi, developed across different cultures
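The first two items on this list are still taught today almost unchanged. The sketch below shows both in Python; it uses the modern remainder form of Euclid's algorithm (Euclid's own presentation used repeated subtraction), and the function names are illustrative.

```python
def gcd(a: int, b: int) -> int:
    """Euclidean algorithm: gcd(a, b) == gcd(b, a mod b), repeated until b is 0."""
    a, b = abs(a), abs(b)
    while b:
        a, b = b, a % b
    return a


def sieve_of_eratosthenes(limit: int) -> list[int]:
    """Return all primes <= limit by crossing out the multiples of each prime."""
    if limit < 2:
        return []
    is_prime = [True] * (limit + 1)
    is_prime[0] = is_prime[1] = False
    p = 2
    while p * p <= limit:
        if is_prime[p]:
            # Multiples below p * p were already crossed out by smaller primes.
            for multiple in range(p * p, limit + 1, p):
                is_prime[multiple] = False
        p += 1
    return [n for n, prime in enumerate(is_prime) if prime]


print(gcd(1071, 462))             # 21
print(sieve_of_eratosthenes(30))  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
```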

These early algorithms laid the groundwork for the computational thinking that would become crucial in the digital age.

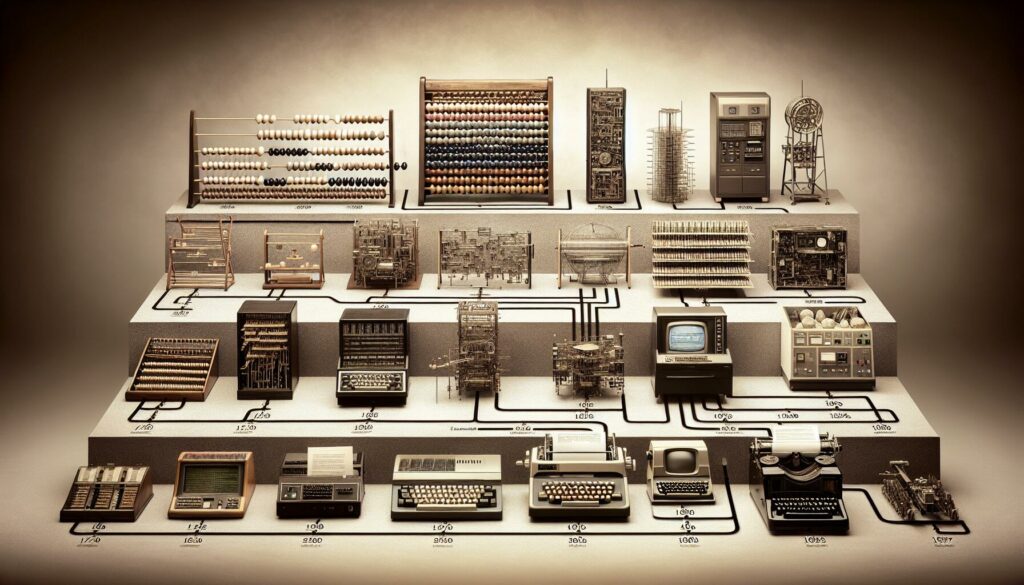

The Mechanical Era: Algorithms in Physical Form

As human society progressed, so did the complexity of the problems we sought to solve. The industrial revolution brought about a new era of mechanical computation, where algorithms began to take physical form in machines.

Charles Babbage and the Analytical Engine

In the 1830s, Charles Babbage conceived the Analytical Engine, a mechanical computer that, although never fully built in his lifetime, was designed to execute complex mathematical operations. This machine was to be programmed using punched cards, a concept borrowed from the Jacquard loom used in textile manufacturing.

Babbage’s collaborator, Ada Lovelace, is often credited with writing the first algorithm intended to be processed by a machine. Her notes on the Analytical Engine, published in 1843, included a method for calculating Bernoulli numbers, which many regard as the first computer program.
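For a sense of the computation involved, here is a minimal modern sketch that generates Bernoulli numbers from the standard recurrence, using exact fractions. It is not a reproduction of Lovelace's program, which used different notation, indexing, and a step-by-step formulation for the Engine; the function name is illustrative, and the sketch assumes the convention B₁ = -1/2.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n: int) -> list[Fraction]:
    """Return B_0..B_n using sum_{k=0}^{m} C(m+1, k) * B_k = 0 for m >= 1."""
    b = [Fraction(0)] * (n + 1)
    b[0] = Fraction(1)
    for m in range(1, n + 1):
        # Solve the recurrence for B_m given all earlier values.
        total = sum(comb(m + 1, k) * b[k] for k in range(m))
        b[m] = -total / (m + 1)
    return b


print(bernoulli_numbers(8)[8])  # -1/30
```

Exact rational arithmetic matters here: the values alternate in sign and grow quickly, so floating point would accumulate error in later terms.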

The Rise of Electromechanical Computers

The late 1930s and early 1940s saw the development of electromechanical computers, which could execute longer and more complex algorithms at faster speeds. Notable examples include:

- The Z3 (1941), created by Konrad Zuse, often cited as the first working programmable, fully automatic digital computer

- The Harvard Mark I (1944), which could perform long computations automatically

These machines implemented algorithms through a combination of mechanical and electrical components, paving the way for fully electronic computers.

The Electronic Age: Algorithms in the Digital Realm

The advent of electronic computers in the mid-20th century marked a turning point in the evolution of algorithms. With increased processing power and storage capabilities, more complex algorithms could be implemented and executed at unprecedented speeds.

ENIAC and the Birth of Modern Computing

The Electronic Numerical Integrator and Computer (ENIAC), completed in 1945, was one of the first general-purpose electronic computers. It could be reprogrammed to solve a wide variety of problems, making it a versatile platform for implementing diverse algorithms.

During this era, fundamental algorithms for tasks like sorting and searching began to emerge. Some classic algorithms developed during this time include:

- Merge Sort (1945) by John von Neumann

- Quicksort (developed 1959, published 1961) by Tony Hoare

- Binary Search (first described for electronic computers in 1946, though the idea is much older)
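The three algorithms above can be sketched in a few lines each. This is a minimal Python illustration with hypothetical function names; the quicksort builds new lists for readability rather than partitioning in place as Hoare's original scheme does.

```python
def merge_sort(items: list) -> list:
    """Split in half, sort each half recursively, then merge: O(n log n)."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left, right = merge_sort(items[:mid]), merge_sort(items[mid:])
    merged, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        # "<=" keeps equal elements in their original order, so the sort is stable.
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


def quicksort(items: list) -> list:
    """Partition around a pivot, then sort each side recursively (not in place)."""
    if len(items) <= 1:
        return list(items)
    pivot = items[len(items) // 2]
    less = [x for x in items if x < pivot]
    equal = [x for x in items if x == pivot]
    greater = [x for x in items if x > pivot]
    return quicksort(less) + equal + quicksort(greater)


def binary_search(sorted_items: list, target) -> int:
    """Return the index of target in sorted_items, or -1 if absent: O(log n)."""
    lo, hi = 0, len(sorted_items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return -1


print(merge_sort([5, 2, 9, 1, 5, 6]))  # [1, 2, 5, 5, 6, 9]
```

Binary search only works on sorted input, which is one reason fast sorting and fast searching developed side by side.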

The Emergence of Programming Languages

As computers became more sophisticated, high-level programming languages were developed to make it easier to write and implement algorithms. Some key milestones include:

- FORTRAN (1957), one of the first high-level programming languages

- LISP (1958), which introduced ideas such as garbage collection and became a mainstay of artificial intelligence research

- ALGOL (1958), which had a profound influence on many subsequent programming languages

These languages allowed programmers to express algorithms in a more human-readable form, greatly accelerating the development of complex software systems.

The Information Age: Algorithms for Data Processing

As computers became more prevalent in business and scientific research, the need for efficient data processing algorithms grew. This period saw the development of many algorithms that are still fundamental to computer science today.

Database Algorithms

The growth of digital data led to the development of sophisticated database management systems. Key algorithms in this area include:

- B-trees (1970), balanced search trees for efficient data storage and retrieval on disk

- Relational algebra operations for querying databases, grounded in E. F. Codd’s relational model (1970)

- Indexing algorithms for faster data access
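To make relational algebra and indexing concrete, the sketch below implements selection, projection, and an equi-join over in-memory lists of dictionaries. The join builds a hash table on one relation and probes it with the other (a hash join, one common way databases execute joins). All function names and the sample `employees`/`departments` data are hypothetical; a real database system adds query planning, on-disk indexes such as B-trees, and much more.

```python
def select(rows, predicate):
    """Selection (sigma): keep only rows that satisfy the predicate."""
    return [row for row in rows if predicate(row)]


def project(rows, columns):
    """Projection (pi): keep only the named columns, dropping duplicate rows."""
    seen, result = set(), []
    for row in rows:
        key = tuple(row[c] for c in columns)
        if key not in seen:
            seen.add(key)
            result.append(dict(zip(columns, key)))
    return result


def hash_join(left, right, column):
    """Equi-join on one column: index `right` in a hash table, then probe it."""
    index = {}
    for right_row in right:
        index.setdefault(right_row[column], []).append(right_row)
    return [
        {**left_row, **right_row}
        for left_row in left
        for right_row in index.get(left_row[column], [])
    ]


employees = [
    {"name": "Ada", "dept_id": 1},
    {"name": "Alan", "dept_id": 2},
    {"name": "Grace", "dept_id": 1},
]
departments = [
    {"dept_id": 1, "dept": "Math"},
    {"dept_id": 2, "dept": "Logic"},
]
joined = hash_join(employees, departments, "dept_id")
math_staff = project(select(joined, lambda row: row["dept"] == "Math"), ["name"])
print(math_staff)  # [{'name': 'Ada'}, {'name': 'Grace'}]
```

The hash table turns each lookup into roughly constant time, so the join costs about one pass over each relation instead of comparing every pair of rows.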

Graph Algorithms

Graph theory found numerous applications in computer science, leading to the development of important algorithms such as:

- Dijkstra’s algorithm (1956) for finding shortest paths in graphs with non-negative edge weights

- The A* search algorithm (1968), which uses a heuristic estimate to guide shortest-path search

- PageRank (1996) for ranking web pages in search engine results
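Two of these graph algorithms fit in a short sketch. Below, Dijkstra's algorithm uses a binary heap as its priority queue, and PageRank is computed by power iteration in its commonly taught form with a damping factor. The function names, the adjacency-dictionary input format, and the defaults `damping=0.85` and `iterations=100` are assumptions for illustration; web-scale PageRank relies on sparse-matrix techniques and convergence checks not shown here.

```python
import heapq


def dijkstra(graph: dict, source) -> dict:
    """Shortest distances from source; graph maps node -> [(neighbor, weight)], weights >= 0."""
    dist = {source: 0}
    heap = [(0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry: a shorter path to node was already found
        for neighbor, weight in graph.get(node, []):
            candidate = d + weight
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                heapq.heappush(heap, (candidate, neighbor))
    return dist


def pagerank(links: dict, damping: float = 0.85, iterations: int = 100) -> dict:
    """Power iteration; links maps every page -> list of pages it links to."""
    pages = list(links)
    n = len(pages)
    rank = {p: 1 / n for p in pages}
    for _ in range(iterations):
        new_rank = {p: (1 - damping) / n for p in pages}
        for page, outgoing in links.items():
            if outgoing:
                # A page splits its rank evenly among the pages it links to.
                share = damping * rank[page] / len(outgoing)
                for target in outgoing:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank


roads = {"A": [("B", 1), ("C", 4)], "B": [("C", 2), ("D", 5)], "C": [("D", 1)], "D": []}
print(dijkstra(roads, "A"))  # {'A': 0, 'B': 1, 'C': 3, 'D': 4}
```

The non-negative weight requirement matters: once Dijkstra's algorithm pops a node, it assumes no later path can be shorter, which negative edges would break.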

Cryptographic Algorithms

As digital communication became more prevalent, the need for secure data transmission led to advancements in cryptographic algorithms:

- DES (Data Encryption Standard, published 1975 and adopted as a U.S. federal standard in 1977)

- RSA (Rivest-Shamir-Adleman, 1977) for public-key cryptography

- AES (Advanced Encryption Standard, 2001)
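The core of RSA is modular exponentiation with a public exponent e and a private exponent d that undo each other. The sketch below is textbook RSA with deliberately tiny primes, for illustration only: it is insecure, since real deployments use keys thousands of bits long and padding schemes such as OAEP. The function names are hypothetical, and `pow(e, -1, phi)` requires Python 3.8 or later.

```python
def make_toy_rsa_keys(p: int, q: int, e: int):
    """Build a textbook RSA key pair from primes p and q. Insecure: demo only."""
    n = p * q
    phi = (p - 1) * (q - 1)
    # d is the modular inverse of e; pow raises ValueError if gcd(e, phi) != 1.
    d = pow(e, -1, phi)
    return (n, e), (n, d)


def rsa_apply(key, message: int) -> int:
    """Encrypt (m^e mod n) or decrypt (c^d mod n); both are modular exponentiation."""
    n, exponent = key
    return pow(message, exponent, n)


public_key, private_key = make_toy_rsa_keys(61, 53, 17)
ciphertext = rsa_apply(public_key, 65)
recovered = rsa_apply(private_key, ciphertext)
print(ciphertext, recovered)  # 2790 65
```

The security rests on the fact that computing d from the public key (n, e) appears to require factoring n, which is easy for 3233 but infeasible for properly sized keys.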

The Era of Big Data and Machine Learning

The late 20th and early 21st centuries have seen an explosion in the amount of data being generated and processed. This has led to the development of new classes of algorithms designed to handle massive datasets and extract meaningful insights.

Big Data Algorithms

To process and analyze large volumes of data, new algorithmic approaches were needed:

- MapReduce (2004), a programming model for processing large datasets

- Streaming algorithms for processing data in real time as it arrives, often with limited memory

- Approximate algorithms for handling datasets too large for exact computation
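The MapReduce model splits a job into a map phase that emits key-value pairs, a shuffle that groups values by key, and a reduce phase that combines each group. The sketch below runs the classic word-count example in a single Python process; it only illustrates the data flow, has none of the distribution or fault tolerance of a real framework, and its function names are hypothetical rather than any framework's API.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document: str):
    """Mapper: emit a (word, 1) pair for every word in one input document."""
    for word in document.lower().split():
        yield word, 1


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key, as the framework does between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reducer: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(documents):
    pairs = chain.from_iterable(map_phase(doc) for doc in documents)
    grouped = shuffle_phase(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


print(word_count(["the cat sat", "the dog sat"]))  # {'the': 2, 'cat': 1, 'sat': 2, 'dog': 1}
```

Because each map call touches one document and each reduce call touches one key, both phases can be spread across many machines, which is what made the model practical for very large datasets.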

Machine Learning Algorithms

The field of machine learning has seen rapid growth, with algorithms that can learn from and make predictions or decisions based on data:

- Support Vector Machines (1990s) for classification and regression analysis

- Random Forests (2001) for ensemble learning

- Deep Learning algorithms, including Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs)
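Support vector machines are usually trained by solving a constrained quadratic program, often with kernels. As a minimal sketch, assuming a linear SVM and labels of +1 or -1, the code below instead minimizes the equivalent unconstrained primal objective, the regularized hinge loss, with stochastic subgradient descent. The function names and the hyperparameters `lam`, `learning_rate`, and `epochs` are illustrative assumptions.

```python
def train_linear_svm(points, labels, lam=0.01, learning_rate=0.1, epochs=200):
    """Minimize lam/2 * ||w||^2 + hinge loss max(0, 1 - y * (w.x + b)) per sample."""
    w = [0.0] * len(points[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(points, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:
                # Inside the margin or misclassified: the hinge term contributes.
                w = [wi - learning_rate * (lam * wi - y * xi) for wi, xi in zip(w, x)]
                b += learning_rate * y
            else:
                # Only the regularizer contributes: shrink w toward zero.
                w = [wi - learning_rate * lam * wi for wi in w]
    return w, b


def predict(w, b, x):
    """Classify x by which side of the hyperplane w.x + b = 0 it falls on."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


points = [[2, 2], [3, 3], [-2, -2], [-3, -3]]
labels = [1, 1, -1, -1]
w, b = train_linear_svm(points, labels)
```

The hinge loss is zero for points safely outside the margin, so only points near the boundary (the support vectors) keep pushing the weights, which is the defining idea of an SVM.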

These algorithms have enabled breakthroughs in areas such as image recognition, natural language processing, and autonomous systems.

Quantum Algorithms: The Next Frontier

As we look to the future, quantum computing promises to revolutionize algorithmic thinking once again. Quantum algorithms exploit principles of quantum mechanics such as superposition and interference to solve certain problems faster than the best known classical algorithms; for some problems, such as integer factorization, the speedup is superpolynomial.

Notable Quantum Algorithms

- Shor’s algorithm (1994) for integer factorization, which could potentially break many current cryptographic systems

- Grover’s algorithm (1996) for searching an unsorted database, which needs on the order of √N queries instead of the roughly N required classically

- Quantum approximate optimization algorithm (QAOA, 2014) for combinatorial optimization problems
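The mechanics of Grover's algorithm can be seen by simulating its amplitudes classically. Each iteration flips the sign of the marked item's amplitude (the oracle), then reflects every amplitude about the mean (the diffusion step), which steadily concentrates probability on the marked item. This Python sketch is illustrative only: a classical simulation tracks all N amplitudes, so it gains no speedup, and the function name `grover_search` is hypothetical.

```python
import math


def grover_search(num_qubits: int, marked: int) -> list[float]:
    """Simulate Grover's amplitudes; return the probability of measuring each state."""
    n = 2 ** num_qubits
    # Uniform superposition, as produced by a Hadamard gate on every qubit.
    amplitudes = [1 / math.sqrt(n)] * n
    # About (pi / 4) * sqrt(N) iterations maximizes the success probability.
    iterations = math.floor(math.pi / 4 * math.sqrt(n))
    for _ in range(iterations):
        amplitudes[marked] = -amplitudes[marked]  # oracle: flip the marked phase
        mean = sum(amplitudes) / n
        amplitudes = [2 * mean - a for a in amplitudes]  # inversion about the mean
    return [a * a for a in amplitudes]


probs = grover_search(3, 5)
print(round(probs[5], 3))  # 0.945
```

With 8 items, two iterations raise the chance of measuring the marked item from 1/8 to about 94.5%; running more iterations than the optimum would start rotating probability away from it again.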

While still in their infancy, quantum algorithms represent the cutting edge of algorithmic development and may lead to breakthroughs in fields like cryptography, drug discovery, and financial modeling.

The Impact of Algorithms on Modern Society

As we’ve traced the evolution of algorithms through computing history, it’s clear that their impact extends far beyond the realm of computer science. Algorithms now play a crucial role in nearly every aspect of our digital lives.

Algorithms in Everyday Life

From the moment we wake up to when we go to sleep, algorithms are working behind the scenes:

- Recommendation systems suggest what we should watch, read, or buy

- Navigation apps use routing algorithms to guide us to our destinations

- Social media feeds are curated by complex ranking algorithms

- Financial transactions are processed and secured using cryptographic algorithms

Ethical Considerations

The pervasiveness of algorithms in decision-making systems has raised important ethical questions:

- Bias in machine learning algorithms can perpetuate or exacerbate social inequalities

- Privacy concerns arise from algorithms that process personal data

- The “black box” nature of some complex algorithms makes it difficult to understand or challenge their decisions

As algorithms continue to evolve and become more integrated into our lives, addressing these ethical concerns will be crucial.

The Future of Algorithmic Development

Looking ahead, several trends are likely to shape the future of algorithms:

Explainable AI

There’s a growing emphasis on developing algorithms that can not only make decisions but also explain the reasoning behind those decisions. This is particularly important in fields like healthcare and finance, where transparency is crucial.

Edge Computing

As IoT devices become more prevalent, there’s a need for algorithms that can process data efficiently on resource-constrained devices, rather than relying on cloud computing.

Quantum-Inspired Algorithms

Even as we wait for practical quantum computers, insights from quantum algorithms are inspiring new classical algorithms that offer improved performance for certain problems.

Neuromorphic Computing

Algorithms inspired by the structure and function of the human brain are being developed, potentially leading to more efficient and adaptable AI systems.

Conclusion: The Ongoing Algorithmic Revolution

From ancient mathematical procedures to quantum computing, the evolution of algorithms has been a journey of continuous innovation and adaptation. As we’ve seen, algorithms have not only reflected the technological capabilities of their time but have also driven advancements in computing and shaped our digital world.

The story of algorithms is far from over. As we face new challenges in areas like climate change, healthcare, and space exploration, algorithms will undoubtedly play a crucial role in finding solutions. The ability to think algorithmically – to break down complex problems into step-by-step procedures – remains as valuable as ever.

For aspiring programmers and computer scientists, understanding this rich history provides context for current practices and inspiration for future innovations. Platforms like AlgoCademy play a vital role in this ecosystem, offering interactive coding tutorials and resources that help learners develop algorithmic thinking skills and prepare for the challenges of modern software development.

As we stand on the shoulders of algorithmic giants, from al-Khwarizmi to the pioneers of quantum computing, we can look forward to a future where algorithms continue to push the boundaries of what’s possible, solving ever more complex problems and opening up new frontiers in human knowledge and capability.